Table of Contents

The Rise of AI Agents: Revolutionizing Beta Testing in 2025

Understanding AI Agents: Your New Beta Testing Powerhouse

The Beta Tester’s Dilemma: Human Expertise vs. AI Efficiency

AI Agents in Action: Automating Test Case Generation and Execution

Machine Learning Meets User Experience: AI-Driven Usability Testing

Scaling Up: How AI Agents Handle Multi-Platform and Localization Testing

Real-Time Bug Detection and Reporting: AI’s 24/7 Vigilance

The Human Touch: Redefining the Role of Beta Testers in an AI-Driven World

Implementing AI Agents: A Step-by-Step Guide for Business Owners

Future-Proofing Your QA: The Long-Term Impact of AI on Beta Testing

FAQ: Demystifying AI Agents in Beta Testing

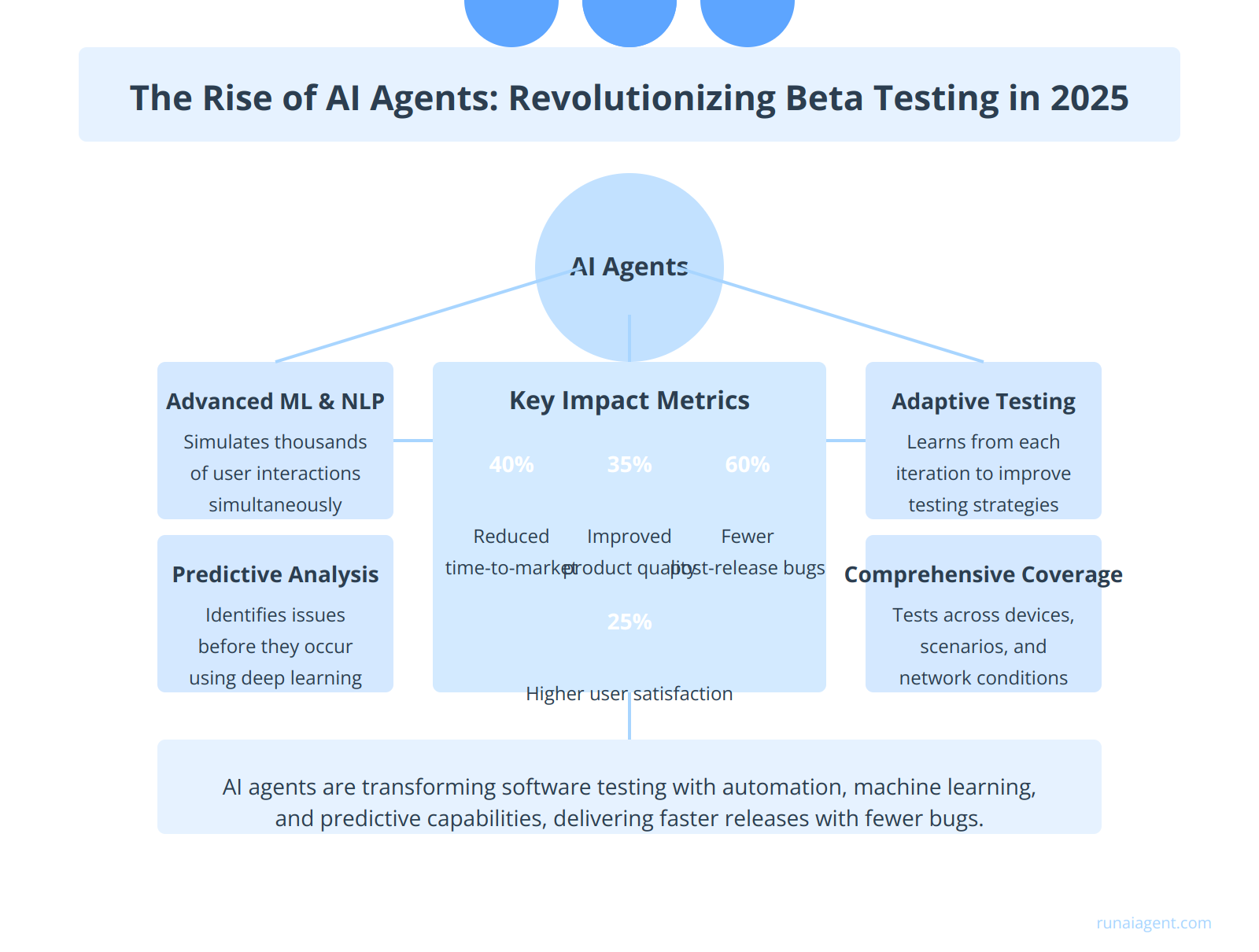

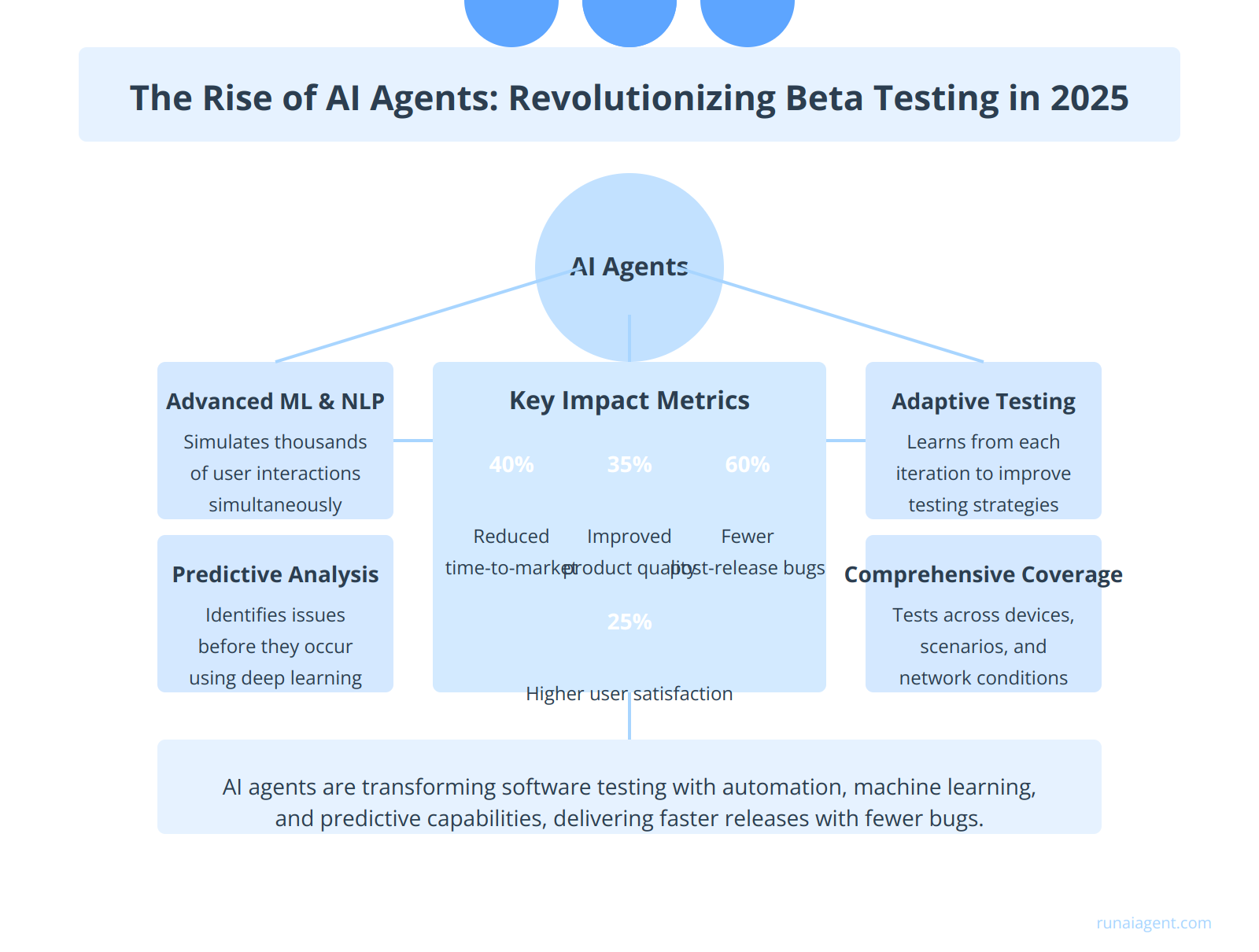

The Rise of AI Agents: Revolutionizing Beta Testing in 2025

In 2025, AI agents are poised to reshape beta testing, marking a significant shift in software development and quality assurance. These systems, built on machine learning and natural language processing, turn traditional testing methodologies into largely automated workflows. AI agents can simulate thousands of user interactions in parallel, surfacing bugs, performance issues, and user experience inconsistencies far faster than manual testing can. By drawing on models trained on large datasets of historical bug reports and user behavior patterns, these agents can flag likely issues before they reach users, enabling proactive fixes. Organizations adopting AI-driven testing tools have reported reductions in time-to-market of around 40% and overall product-quality improvements of around 35%. AI agents can also adapt to complex, changing test environments, learning from each iteration to refine their testing strategies. This adaptive approach broadens coverage across user scenarios, device configurations, and network conditions, making applications more robust. Companies using AI-powered beta testing have reported a 60% decrease in post-release bug reports and a 25% increase in user satisfaction scores, underscoring the impact AI agents can have on quality assurance practices.

Understanding AI Agents: Your New Beta Testing Powerhouse

AI agents represent a paradigm shift in beta testing, going beyond traditional automated testing tools by adding adaptive, learning-based behavior. These software entities combine machine learning, natural language processing, and computer vision to navigate applications autonomously, identify bugs, and assess user experience. Unlike conventional tools that follow predefined scripts, AI agents adapt their testing strategies based on real-time observations and learned patterns. They can simulate diverse user behaviors, stress-test edge cases, and uncover subtle usability issues that human testers might overlook. In beta testing, AI agents excel at continuous monitoring, running around the clock across multiple devices and OS versions at once. They can quickly generate test reports, prioritize issues by severity, and even suggest potential fixes. For example, an AI agent might detect a rare crash that occurs only on specific Android devices under high memory load, a scenario that is difficult to reproduce manually. These agents can also analyze user interaction data to predict pain points in the user journey, enabling optimization before a wider release. Using reinforcement learning, AI agents refine their testing approaches with each iteration, becoming more efficient over time, so your beta testing process evolves alongside your product.
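To make the reinforcement-learning idea concrete, here is a minimal sketch of an exploratory agent, assuming the app can be modeled as a small state machine of screens and actions. The `APP` graph, the novelty reward (+1 for an untried action, +10 for a newly found crash), and all hyperparameters are illustrative assumptions, not how any particular product works. It uses plain tabular Q-learning with epsilon-greedy exploration.

```python
import random
from collections import defaultdict

# Hypothetical toy app modeled as screen -> {action: next screen}.
# "CRASH" marks an action that triggers a bug.
APP = {
    "home": {"open_cart": "cart", "search": "results", "settings": "settings"},
    "results": {"open_item": "item", "back": "home"},
    "item": {"add_to_cart": "cart", "back": "results"},
    "cart": {"checkout": "payment", "back": "home"},
    "payment": {"pay_empty_card": "CRASH", "pay": "home"},
    "settings": {"back": "home"},
}

def explore(episodes=200, max_steps=10, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with a novelty bonus that rewards untried actions and new crashes."""
    rng = random.Random(seed)
    q = defaultdict(float)   # (screen, action) -> learned value
    seen = set()             # (screen, action) pairs exercised so far
    crashes = set()          # (screen, action) pairs that crashed the app
    for _ in range(episodes):
        state = "home"       # each episode restarts the app
        for _ in range(max_steps):
            actions = list(APP[state])
            if rng.random() < epsilon:
                action = rng.choice(actions)                        # explore
            else:
                action = max(actions, key=lambda a: q[(state, a)])  # exploit
            nxt = APP[state][action]
            reward = 0.0 if (state, action) in seen else 1.0        # novelty bonus
            seen.add((state, action))
            if nxt == "CRASH":
                if (state, action) not in crashes:
                    reward += 10.0                                  # first sighting of this crash
                crashes.add((state, action))
                q[(state, action)] += alpha * (reward - q[(state, action)])  # terminal update
                break
            target = reward + gamma * max(q[(nxt, a)] for a in APP[nxt])
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return crashes, seen

crashes, seen = explore()
print("crashes found:", crashes)
print(f"transitions covered: {len(seen)} of {sum(len(v) for v in APP.values())}")
```

Because the reward favors actions the agent has not tried yet, the learned values steer it toward unexplored parts of the app; a real agent would replace the hand-written graph with states observed through the UI.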

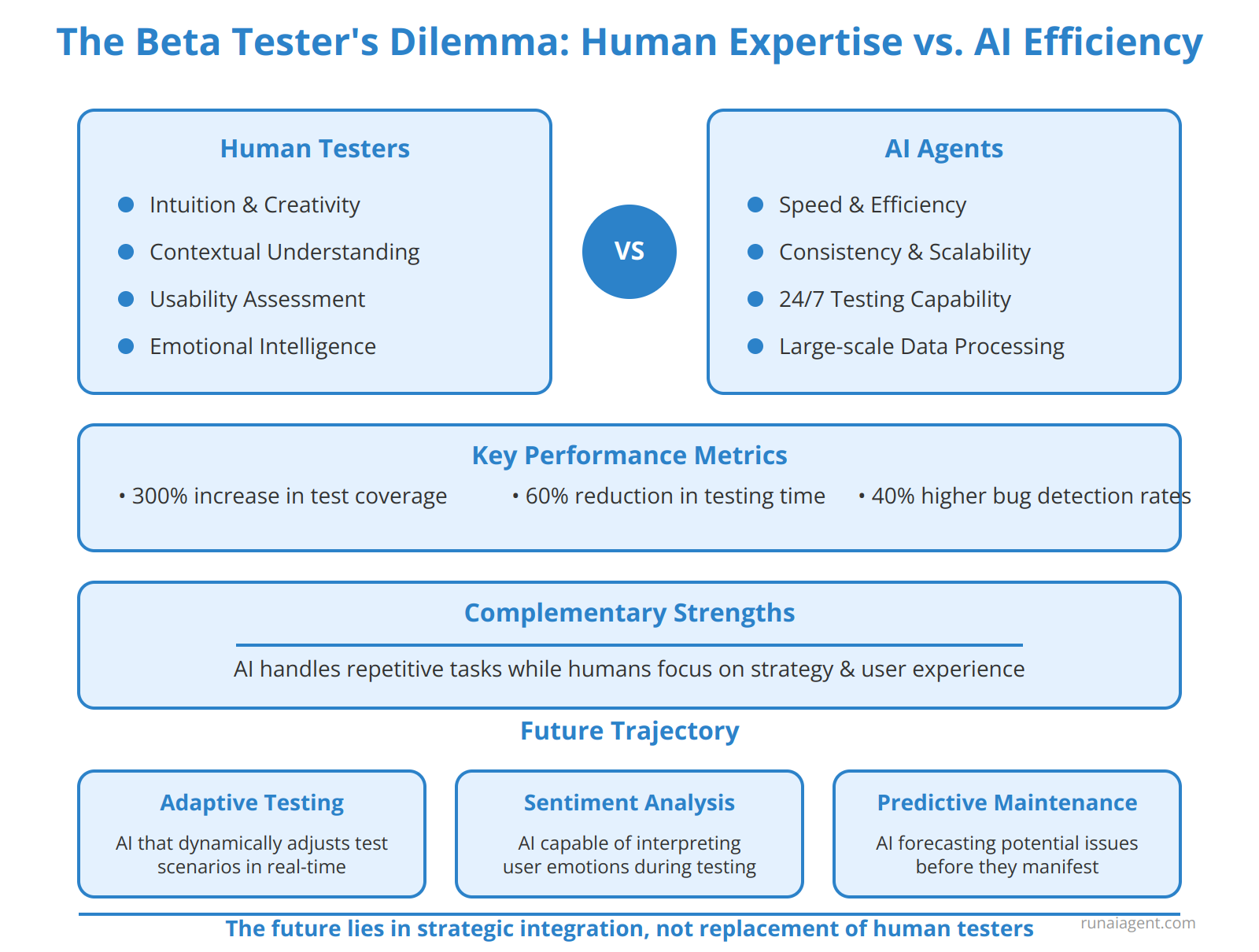

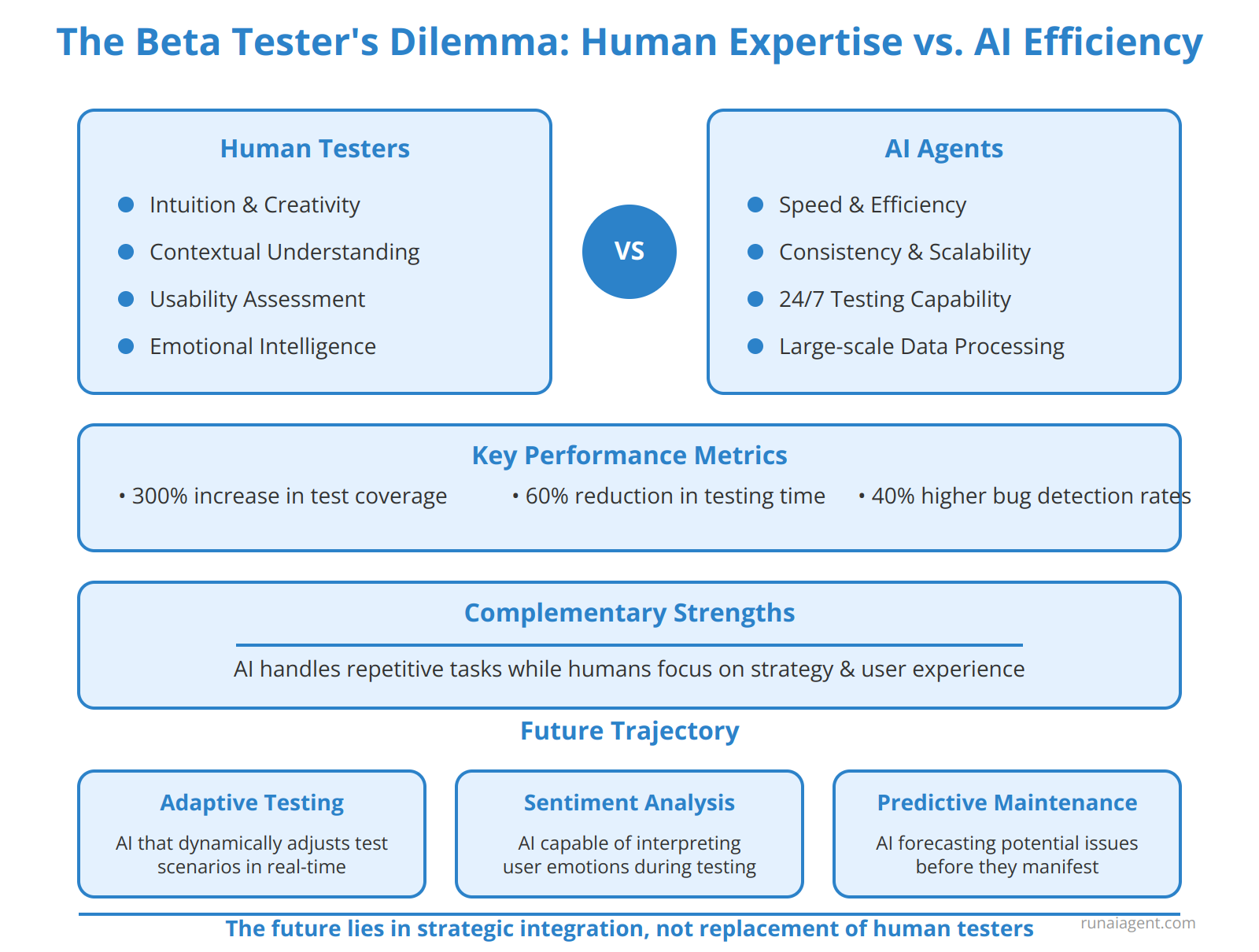

The Beta Tester’s Dilemma: Human Expertise vs. AI Efficiency

The integration of AI agents into beta testing processes presents a complex landscape of opportunities and challenges for the technology industry. While human testers bring invaluable intuition, creativity, and contextual understanding to the testing process, AI agents offer unparalleled efficiency, consistency, and scalability. Human testers excel at identifying subtle usability issues, understanding user emotions, and providing qualitative feedback that can shape product direction. Conversely, AI agents can rapidly execute thousands of test cases, detect minute inconsistencies, and operate 24/7 without fatigue. In performance testing, AI agents have demonstrated the ability to simulate diverse user behaviors and network conditions at a scale impossible for human testers, with some implementations showing a 300% increase in test coverage and a 60% reduction in testing time. However, AI still struggles with nuanced user experience evaluations and creative problem-solving, areas where human testers remain superior.

Complementary Strengths

The optimal approach lies in leveraging the strengths of both human testers and AI agents. By automating repetitive tasks and large-scale simulations with AI, human testers can focus on high-value activities such as exploratory testing, user experience evaluation, and strategic test planning. Early adopter companies have reported a 40% increase in bug detection rates and a 25% reduction in time-to-market from this combined approach. AI-assisted testing tools have also evolved to augment human capabilities, offering intelligent test case generation, predictive analytics for test prioritization, and natural language processing for requirement analysis.

Future Trajectory

As AI technologies advance, particularly in areas like machine learning and natural language understanding, the role of AI in beta testing is expected to expand. Emerging trends include:

- Adaptive Testing: AI agents that dynamically adjust test scenarios based on real-time user behavior and system performance.

- Sentiment Analysis: Advanced AI capable of interpreting user emotions and satisfaction levels during beta testing, bridging the gap with human empathy.

- Predictive Maintenance: AI systems that forecast potential issues before they manifest, allowing preemptive fixes during the beta phase.

While these advancements promise to revolutionize beta testing, the human element remains crucial for interpreting complex scenarios, making ethical decisions, and driving innovation in testing methodologies. The future of beta testing lies not in the replacement of human testers, but in the strategic integration of AI agents to create a more robust, efficient, and insightful testing ecosystem.

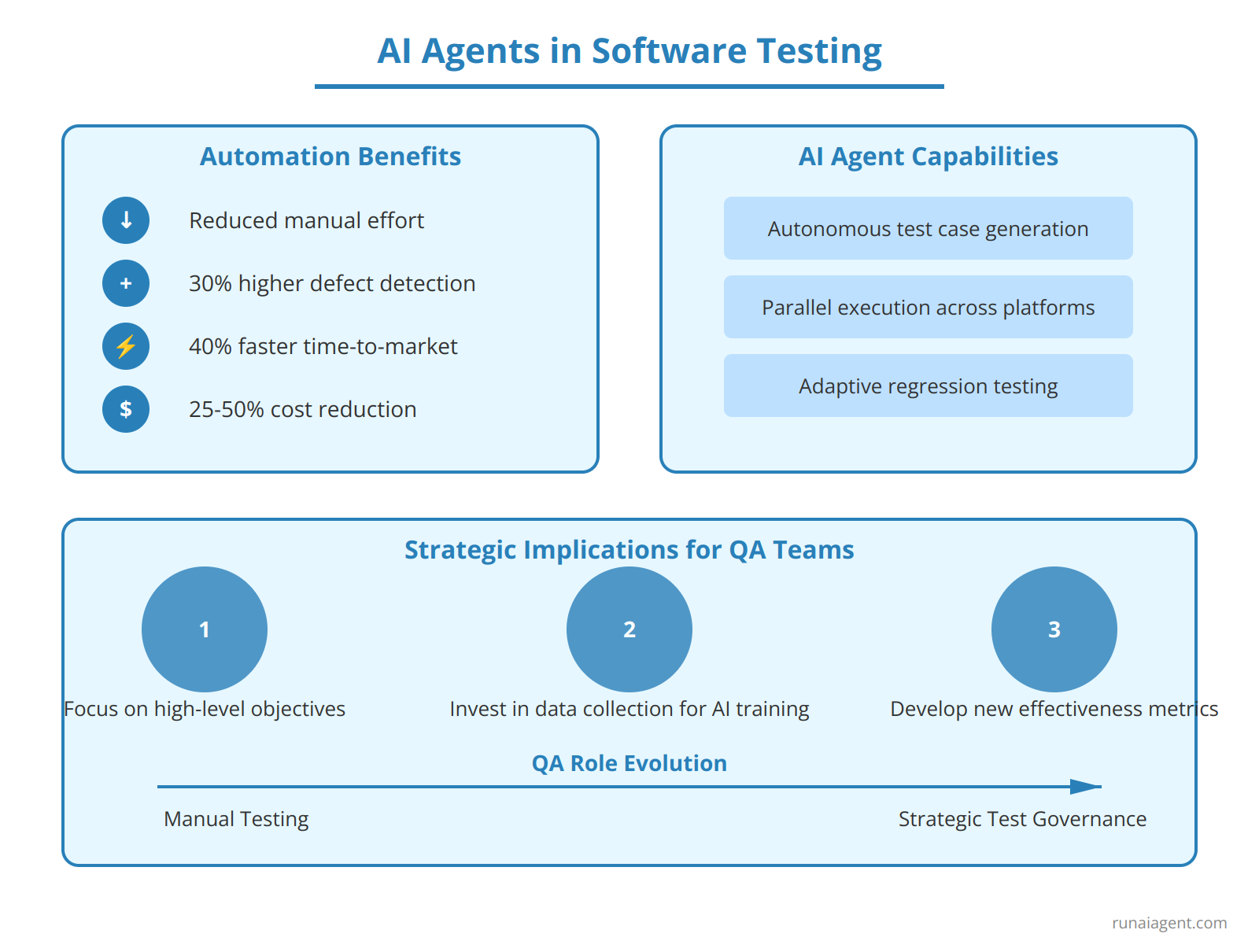

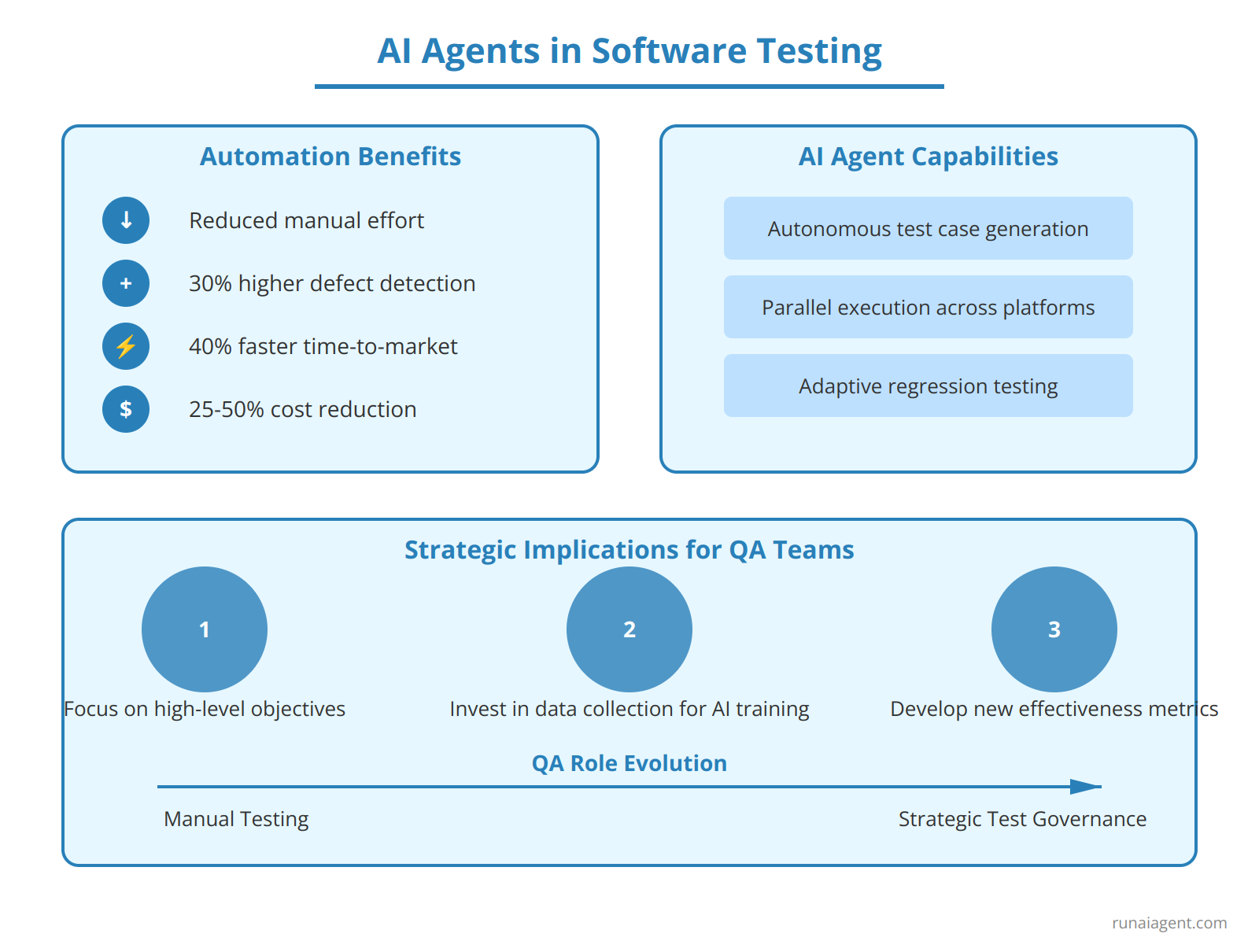

AI Agents in Action: Automating Test Case Generation and Execution

AI agents are transforming software testing by autonomously creating and executing test cases, reducing manual effort while expanding test coverage. These systems use machine learning to analyze application code, user flows, and historical test data to generate comprehensive test suites. For instance, AI agents can automatically identify edge cases and potential vulnerabilities that human testers might overlook, with reported increases in defect detection rates of up to 30%. During execution, AI-powered agents can simulate diverse user behaviors and environmental conditions, running thousands of test scenarios concurrently across multiple platforms and devices. This parallelization can shrink testing cycles from weeks to hours, accelerating time-to-market by as much as 40%. AI agents also excel at regression testing, adapting test cases to code changes and maintaining consistent quality throughout rapid development cycles.
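A full ML-driven generator is beyond a short example, but the core loop of generating and then executing cases can be sketched with classic boundary-value analysis, a non-ML technique that targets the range edges where off-by-one bugs cluster. The `order_total` function, its valid ranges, and the `is_valid` oracle below are hypothetical.

```python
from itertools import product

def boundary_values(lo, hi):
    """Classic boundary-value analysis for an inclusive integer range [lo, hi]."""
    return sorted({lo - 1, lo, lo + 1, (lo + hi) // 2, hi - 1, hi, hi + 1})

def generate_cases(spec):
    """Cartesian product of boundary values for every parameter in the spec."""
    names = list(spec)
    value_sets = [boundary_values(*spec[n]) for n in names]
    return [dict(zip(names, combo)) for combo in product(*value_sets)]

# Hypothetical function under test: quantity must be 1..99, discount 0..50 (percent).
def order_total(quantity, discount, unit_price=10):
    if not (1 <= quantity <= 99) or not (0 <= discount <= 50):
        raise ValueError("out of range")
    return quantity * unit_price * (100 - discount) // 100

def is_valid(case):
    """Oracle: which inputs the specification says should be accepted."""
    return 1 <= case["quantity"] <= 99 and 0 <= case["discount"] <= 50

def run(spec, fn, oracle):
    """Execute every generated case; a case fails if fn accepts invalid
    input or rejects valid input."""
    failures = []
    for case in generate_cases(spec):
        expected_valid = oracle(case)
        try:
            fn(**case)
            ok = expected_valid
        except ValueError:
            ok = not expected_valid
        if not ok:
            failures.append(case)
    return failures

SPEC = {"quantity": (1, 99), "discount": (0, 50)}
print(len(generate_cases(SPEC)), "cases,", len(run(SPEC, order_total, is_valid)), "failures")
```

Swapping in a variant that silently clamps `quantity` to 99 instead of rejecting it makes the five `quantity=100` cases with a valid discount fail, which is the kind of edge-case defect this approach is meant to catch.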

Implications for Test Planning and Strategy

The adoption of AI agents in testing necessitates a paradigm shift in test planning and strategy. Quality Assurance teams must now focus on defining high-level test objectives and acceptance criteria, allowing AI to handle the granular details of test case design. This strategic reorientation enables QA professionals to concentrate on more complex, exploratory testing scenarios that require human intuition. Additionally, organizations need to invest in robust data collection and curation practices to train AI agents effectively, as the quality of test generation directly correlates with the depth and breadth of historical testing data available. Test managers must also develop new metrics to evaluate AI-driven testing efficacy, moving beyond traditional coverage metrics to assess the intelligence and adaptability of their automated testing ecosystem. As AI agents continuously learn and improve, they become invaluable assets in maintaining software quality at scale, potentially reducing overall testing costs by 25-50% while simultaneously enhancing product reliability.

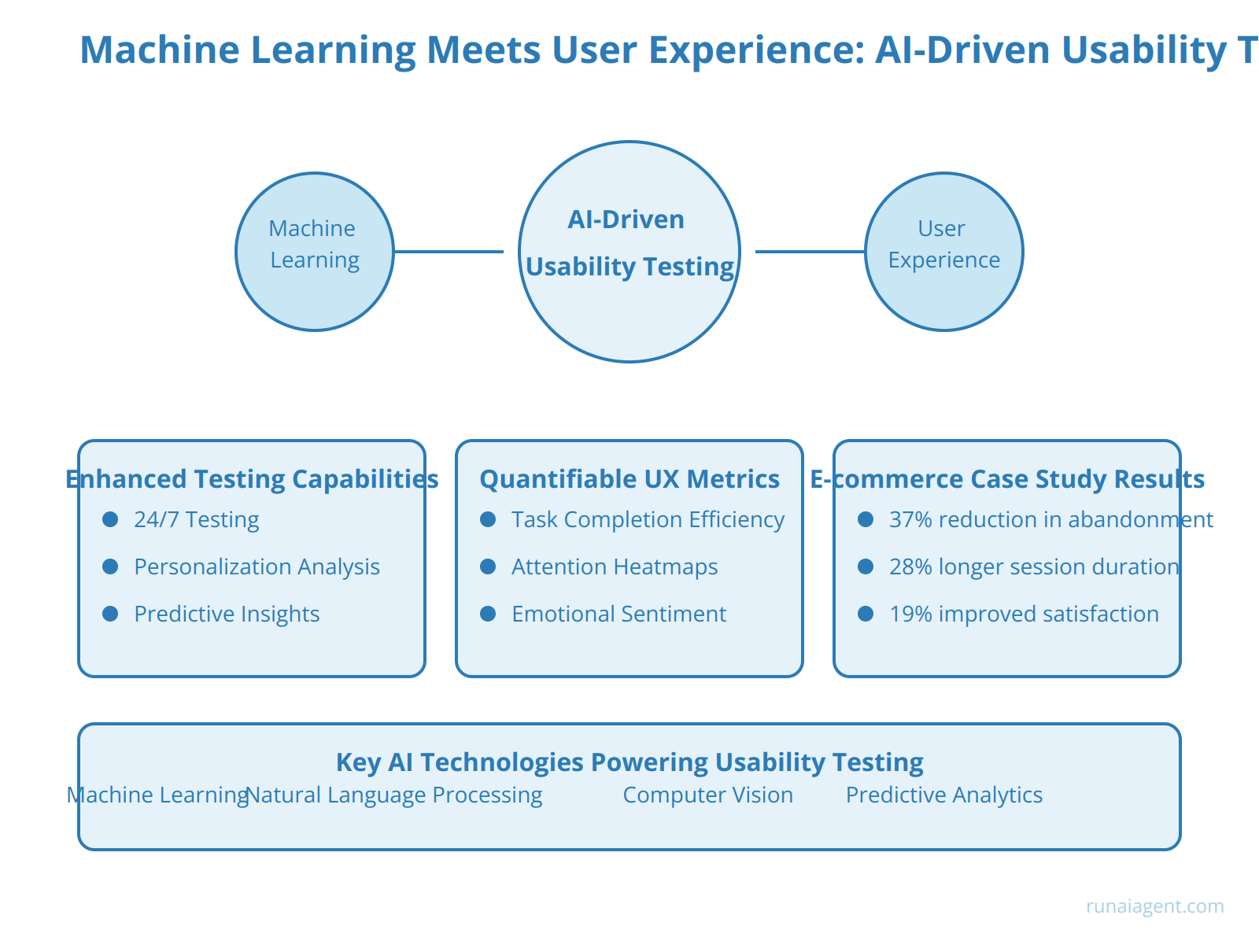

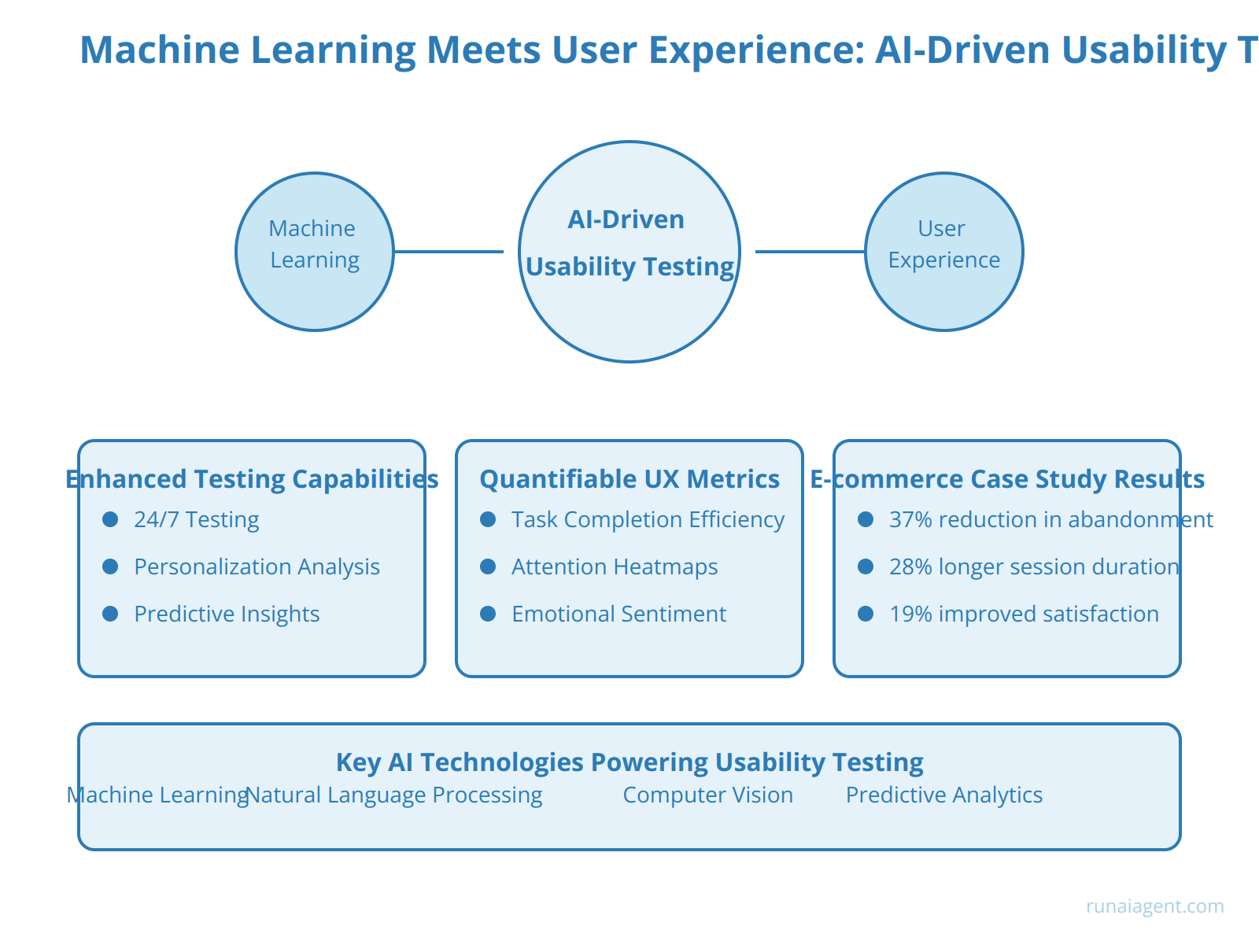

Machine Learning Meets User Experience: AI-Driven Usability Testing

AI agents are revolutionizing usability testing by simulating diverse user behaviors and preferences with unprecedented accuracy and scale. These intelligent systems leverage machine learning algorithms to analyze vast datasets of user interactions, generating insights that human testers often overlook. By employing natural language processing and computer vision techniques, AI agents can interpret complex user interfaces, anticipate user intentions, and identify potential friction points across multiple device types and screen sizes. This approach enables a more comprehensive evaluation of user experience (UX) factors, including cognitive load, emotional responses, and accessibility considerations.

Enhanced Testing Capabilities

AI-driven usability testing offers several advantages over traditional methods:

- 24/7 Testing: AI agents can conduct continuous testing cycles, rapidly iterating through design variations and user scenarios.

- Personalization Analysis: Machine learning models can assess how well interfaces adapt to individual user preferences and behaviors.

- Predictive Insights: AI can forecast potential usability issues before they manifest in real-world usage, enabling proactive UX optimization.

Quantifiable UX Metrics

AI agents excel at quantifying subjective UX elements, providing actionable data on:

- Task Completion Efficiency: Measuring the time and steps required for users to achieve specific goals within an interface.

- Attention Heatmaps: Generating visual representations of user focus and interaction patterns across digital interfaces.

- Emotional Sentiment: Analyzing user facial expressions and voice tones during interaction to gauge emotional responses.
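The first two metrics reduce to straightforward arithmetic over session logs. The sketch below assumes a hypothetical log format with per-attempt completion flags, durations, step counts, and click coordinates; it computes the completion rate, the mean time and steps over successful attempts, and a coarse click-count grid that could feed a heatmap. Emotional sentiment analysis needs trained models and is not covered here.

```python
from collections import Counter
from statistics import mean

# Hypothetical session log format: one dict per attempt at a task.
SESSIONS = [
    {"task": "checkout", "completed": True, "seconds": 42.0, "steps": 6, "clicks": [(120, 40), (300, 410)]},
    {"task": "checkout", "completed": True, "seconds": 58.0, "steps": 9, "clicks": [(130, 45), (310, 420)]},
    {"task": "checkout", "completed": False, "seconds": 90.0, "steps": 14, "clicks": [(640, 700)]},
]

def task_efficiency(sessions, task):
    """Completion rate, plus mean time and steps over successful attempts only."""
    attempts = [s for s in sessions if s["task"] == task]
    done = [s for s in attempts if s["completed"]]
    return {
        "completion_rate": len(done) / len(attempts),
        "mean_seconds": mean(s["seconds"] for s in done) if done else None,
        "mean_steps": mean(s["steps"] for s in done) if done else None,
    }

def click_heatmap(sessions, cell=100):
    """Bin click coordinates into a grid of cell x cell pixel squares and count clicks per square."""
    grid = Counter()
    for s in sessions:
        for x, y in s["clicks"]:
            grid[(x // cell, y // cell)] += 1
    return grid

print(task_efficiency(SESSIONS, "checkout"))
print(click_heatmap(SESSIONS).most_common(2))
```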

Case Study: E-commerce Platform Optimization

One large e-commerce company that implemented AI-driven usability testing reported:

- 37% reduction in cart abandonment rates

- 28% increase in average session duration

- 19% improvement in overall customer satisfaction scores

These outcomes underscore the transformative potential of AI agents in UX testing, offering a level of insight and efficiency that traditional methods struggle to match. As AI technologies continue to evolve, we can expect even more sophisticated simulations of user behavior, further bridging the gap between design intentions and real-world user experiences.

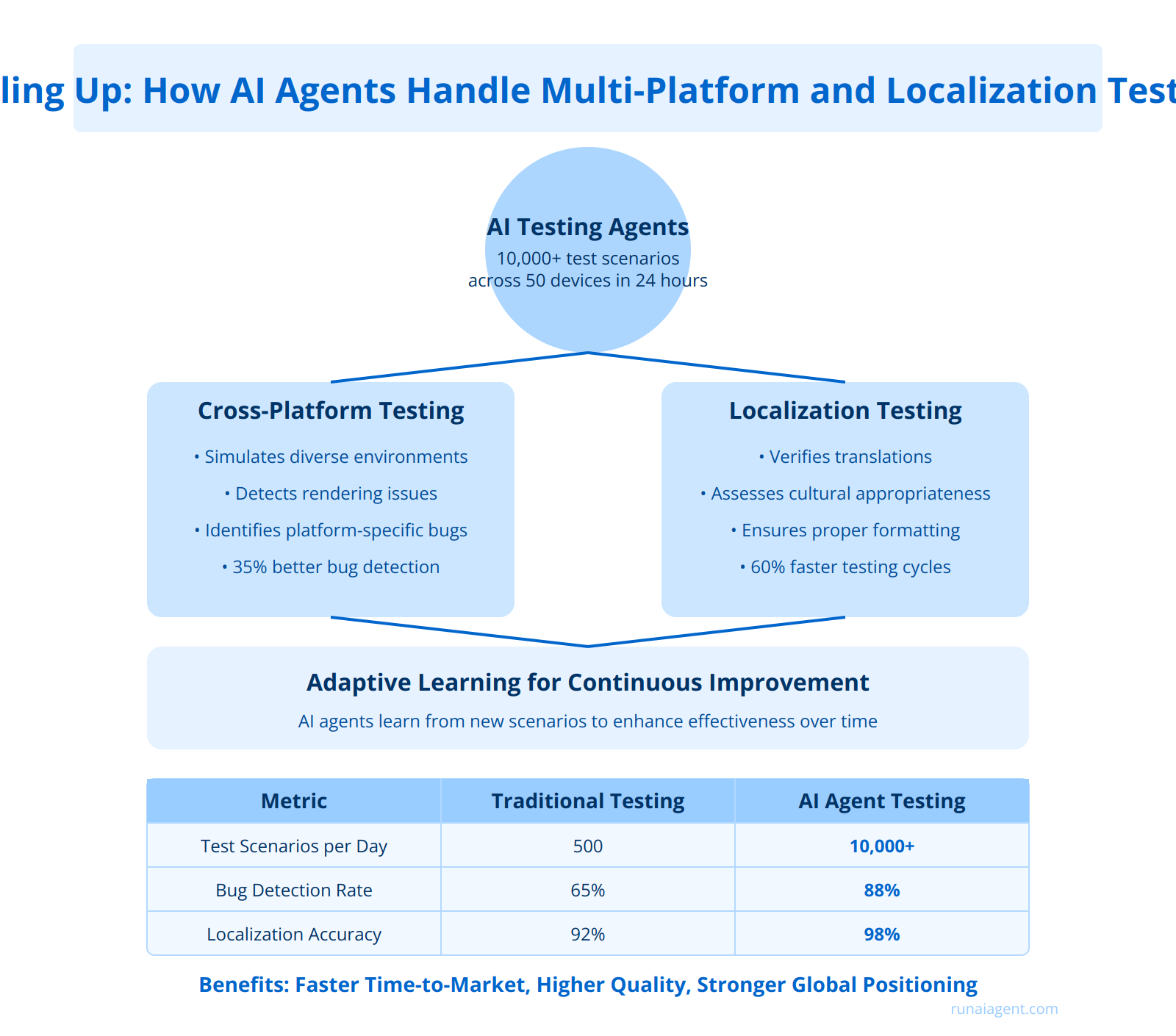

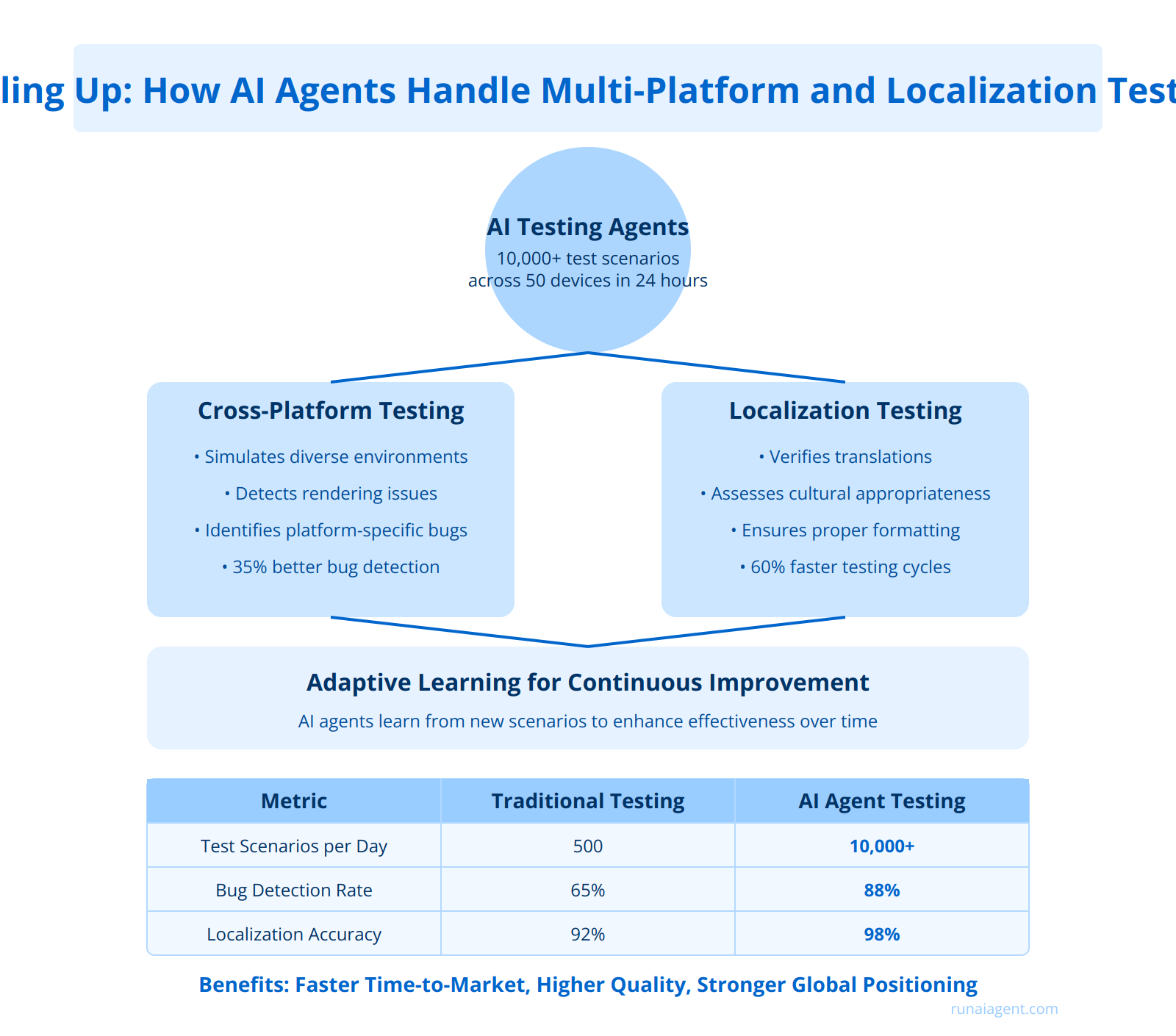

Scaling Up: How AI Agents Handle Multi-Platform and Localization Testing

AI agents are transforming multi-platform and localization testing, addressing the complex challenges of global software deployment. These systems can test across many devices, operating systems, and languages in parallel, dramatically reducing the time and resources traditionally required for comprehensive quality assurance. Using machine learning and natural language processing, AI agents can generate localized test cases, simulate user interactions across different cultural contexts, and identify platform-specific bugs. For instance, a single AI agent can reportedly execute over 10,000 test scenarios across 50 different device configurations in 24 hours, a task that would typically take a human QA team weeks to complete.

Cross-Platform Compatibility Testing

AI agents excel at identifying compatibility issues across platforms, using emulation to replicate diverse hardware and software environments. They can automatically detect rendering discrepancies, functionality gaps, and performance bottlenecks specific to each platform, helping ensure a consistent user experience. AI-driven cross-platform testing has been reported to improve bug detection rates by up to 35% compared to traditional methods.
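Testing every combination of platform settings grows multiplicatively, so a common way to keep a device matrix manageable is pairwise (all-pairs) selection. This is a classic combinatorial-testing technique rather than something specific to AI agents, shown here as a greedy sketch. The parameter names and values are hypothetical, and the sketch ignores real-world constraints such as combinations that cannot exist.

```python
import math
from itertools import combinations, product

PARAMS = {  # hypothetical test dimensions
    "os": ["Android", "iOS", "Windows"],
    "screen": ["small", "medium", "large"],
    "locale": ["en-US", "de-DE", "ja-JP"],
    "network": ["wifi", "4g"],
}

def pairwise_matrix(params):
    """Greedy all-pairs selection: every value pair of every two parameters
    appears in at least one chosen configuration."""
    names = list(params)

    def pairs_of(row):
        return {((a, row[a]), (b, row[b])) for a, b in combinations(names, 2)}

    uncovered = {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in params[a]
        for vb in params[b]
    }
    candidates = [dict(zip(names, combo)) for combo in product(*params.values())]
    chosen = []
    while uncovered:
        # Pick the configuration that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda row: len(pairs_of(row) & uncovered))
        chosen.append(best)
        uncovered -= pairs_of(best)
    return chosen

rows = pairwise_matrix(PARAMS)
full = math.prod(len(v) for v in PARAMS.values())
print(f"{len(rows)} configurations instead of {full}")
for row in rows:
    print(row)
```

For these four hypothetical parameters the full product has 54 configurations, while the greedy selection covers every value pair with far fewer; the exhaustive candidate search is fine for small matrices but would need a smarter generator at scale.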

Automated Localization Verification

For localization, AI agents use language models to verify translations, assess cultural appropriateness, and check formatting across multiple languages. These systems can detect subtle nuances in language use, idiomatic expressions, and locale-specific UI elements that might escape human testers. Automating this process has reportedly reduced localization testing cycles by an average of 60%, while raising accuracy rates to over 98%.
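Part of localization verification is mechanical and needs no language model: a translated string must keep the same placeholders as its source, and strings that grow much longer than the source may overflow their UI element. The sketch below checks both; the `{name}` placeholder syntax, the string tables, and the 1.5x length threshold are assumptions for illustration.

```python
import re

# Assumed placeholder syntax: {identifier}
PLACEHOLDER = re.compile(r"\{[a-zA-Z_][a-zA-Z0-9_]*\}")

def check_translation(key, source, translated, max_ratio=1.5):
    """Return a list of problems found in one translated UI string."""
    if not translated.strip():
        return [f"{key}: missing translation"]
    problems = []
    src_ph = sorted(PLACEHOLDER.findall(source))
    dst_ph = sorted(PLACEHOLDER.findall(translated))
    if src_ph != dst_ph:
        problems.append(f"{key}: placeholders {src_ph} != {dst_ph}")
    if len(translated) > max_ratio * len(source):
        problems.append(f"{key}: {len(translated)} chars may overflow (source has {len(source)})")
    return problems

# Hypothetical English source strings and German translations.
SOURCE = {"greeting": "Hello, {name}!", "cart": "Cart", "items": "{count} items"}
DE = {"greeting": "Hallo, {name}!", "cart": "Warenkorb", "items": "{anzahl} Artikel"}

issues = []
for key, text in SOURCE.items():
    issues.extend(check_translation(key, text, DE.get(key, "")))
for issue in issues:
    print(issue)
```

Here `items` is flagged because the translation renamed `{count}`, and `cart` is flagged only by the length heuristic; whether it actually overflows depends on the rendered layout, which a visual check would confirm.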

Adaptive Learning for Continuous Improvement

One of the most powerful aspects of AI agents in multi-platform and localization testing is their ability to learn and adapt continuously. As these systems encounter new scenarios and edge cases, they refine their testing strategies, building an ever-expanding knowledge base that enhances their effectiveness over time. This adaptive learning capability enables AI agents to stay ahead of evolving technology landscapes and shifting linguistic trends, ensuring that software remains compatible and culturally relevant across global markets.

| Metric | Traditional Testing | AI Agent Testing |
|---|---|---|
| Test Scenarios per Day | 500 | 10,000+ |
| Bug Detection Rate | 65% | 88% |
| Localization Accuracy | 92% | 98% |
| Testing Cycle Time Reduction | N/A | 60% |

By harnessing the power of AI agents for multi-platform and localization testing, technology companies can achieve unprecedented levels of software quality and global market readiness. This not only accelerates time-to-market but also significantly reduces the risk of post-release issues, ultimately leading to higher user satisfaction and stronger competitive positioning in the global technology landscape.

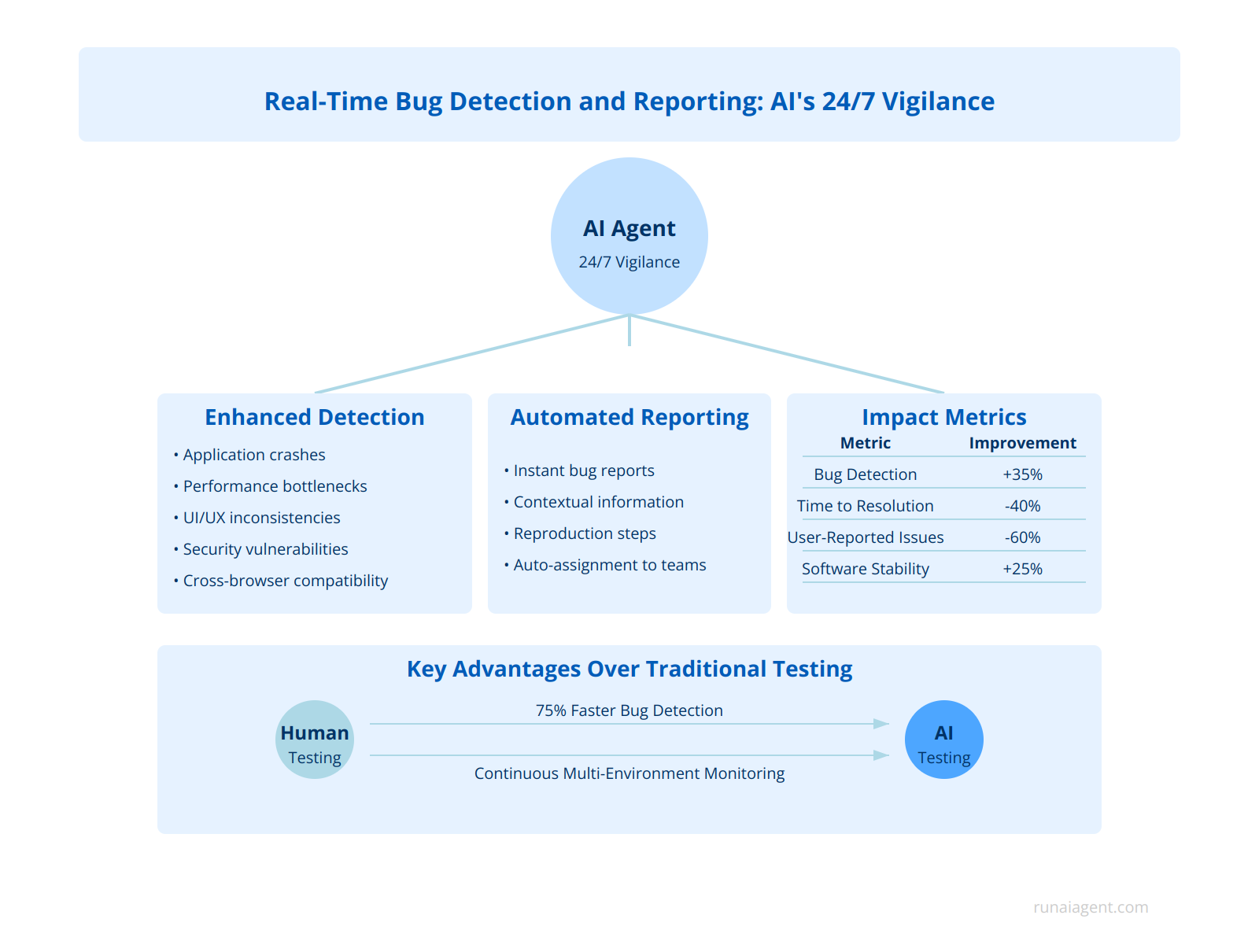

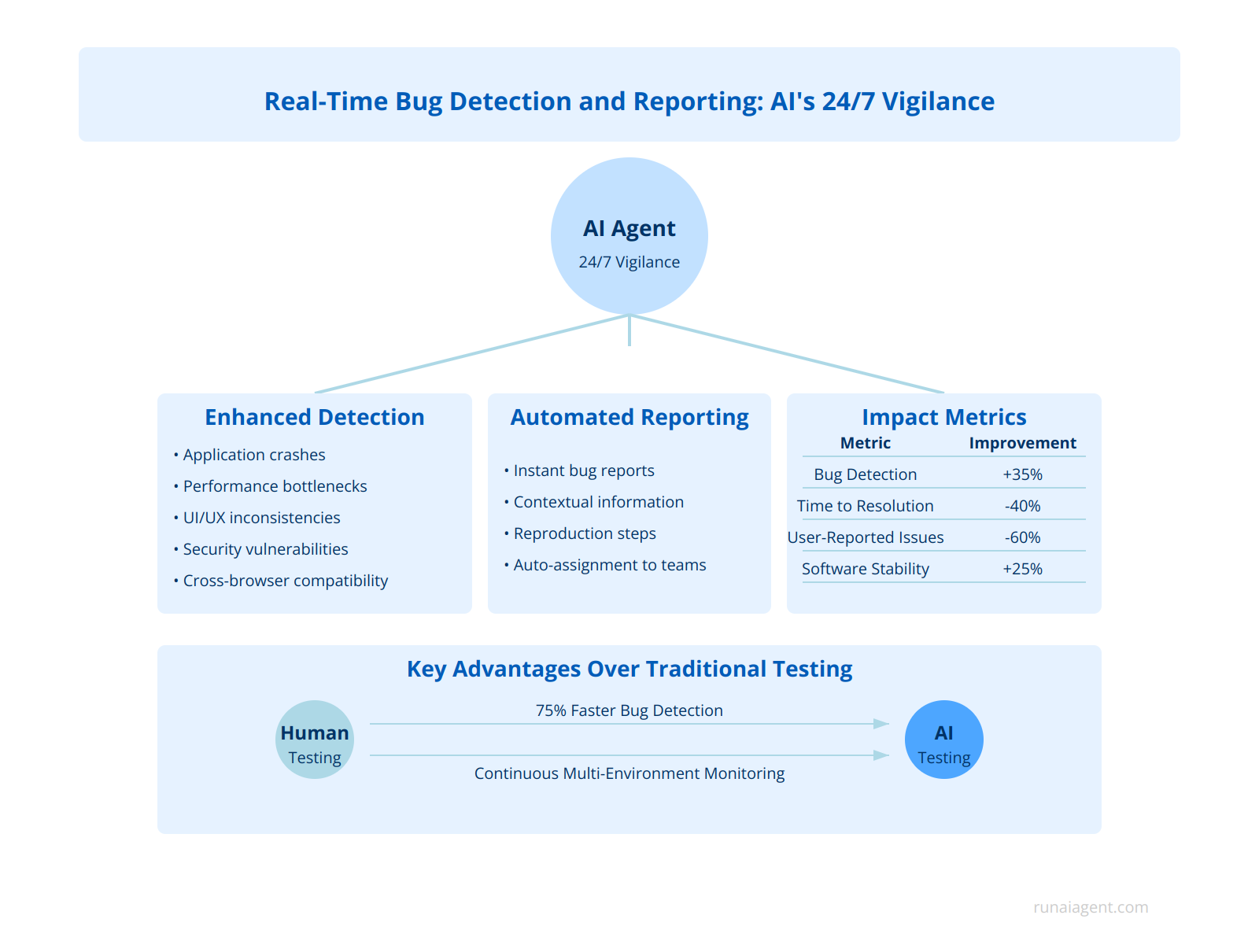

Real-Time Bug Detection and Reporting: AI’s 24/7 Vigilance

AI agents have revolutionized the landscape of software testing, offering unparalleled capabilities in real-time bug detection and reporting. These intelligent systems operate with unwavering vigilance, continuously monitoring application performance and user interactions across multiple environments and devices. Unlike human testers, AI agents can process vast amounts of data simultaneously, identifying subtle anomalies and potential issues that might escape manual observation. This constant surveillance translates to a significant reduction in Mean Time to Detect (MTTD), with some organizations reporting up to 75% faster bug identification compared to traditional methods.
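MTTD is simply the average gap between when a defect is introduced and when it is detected. The sketch below computes it for two sets of made-up incidents, with timestamps chosen so the arithmetic is easy to follow; the incident record format is hypothetical.

```python
from datetime import datetime
from statistics import mean

def mean_time_to_detect(incidents):
    """MTTD in hours: the average of (detected_at - introduced_at) across incidents."""
    gaps = [(i["detected_at"] - i["introduced_at"]).total_seconds() / 3600 for i in incidents]
    return mean(gaps)

# Made-up incidents: when the faulty build shipped vs. when the bug was flagged.
manual = [
    {"introduced_at": datetime(2025, 3, 1, 9), "detected_at": datetime(2025, 3, 2, 17)},  # 32 h
    {"introduced_at": datetime(2025, 3, 5, 9), "detected_at": datetime(2025, 3, 6, 1)},   # 16 h
]
automated = [
    {"introduced_at": datetime(2025, 3, 1, 9), "detected_at": datetime(2025, 3, 1, 17)},  # 8 h
    {"introduced_at": datetime(2025, 3, 5, 9), "detected_at": datetime(2025, 3, 5, 13)},  # 4 h
]

before, after = mean_time_to_detect(manual), mean_time_to_detect(automated)
print(f"MTTD {before:.1f} h -> {after:.1f} h ({(before - after) / before:.0%} faster)")
```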

Enhanced Detection Capabilities

AI-powered bug detection leverages advanced machine learning algorithms to analyze system logs, user behavior patterns, and performance metrics in real-time. These agents can detect a wide range of issues, including:

- Unexpected application crashes

- Performance bottlenecks

- UI/UX inconsistencies

- Security vulnerabilities

- Cross-browser compatibility issues

By employing techniques such as anomaly detection and predictive analytics, AI agents can even anticipate potential bugs before they manifest, enabling proactive resolution and minimizing user impact.
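Anomaly detection over performance metrics can be as simple as a rolling z-score: compare each new sample with the mean and standard deviation of a trailing window. The sketch below is that basic statistical technique, not a learned model; the latency series, window size, and 3-sigma threshold are illustrative assumptions.

```python
from statistics import mean, stdev

def zscore_anomalies(values, window=10, threshold=3.0):
    """Flag indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical p95 API latency samples in milliseconds; a regression lands at index 15.
latency_ms = [120, 118, 125, 122, 119, 121, 124, 117, 123, 120,
              122, 119, 121, 118, 124, 410, 121, 120, 119, 123]
print(zscore_anomalies(latency_ms))
```

One known weakness shows up in this data: once the spike at index 15 enters the trailing window, it inflates the standard deviation and can mask nearby anomalies, which is why production detectors often use robust statistics such as the median and median absolute deviation instead.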

Automated Reporting and Triage

Upon detecting an issue, AI agents instantaneously generate comprehensive bug reports, complete with contextual information, reproduction steps, and relevant system data. This automation accelerates the triage process, allowing development teams to prioritize and address critical issues swiftly. Some advanced AI systems can even categorize and assign bugs to appropriate team members based on historical data and expertise matching, further streamlining the resolution workflow.
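Deduplication and assignment can be sketched without any machine learning: fingerprint each crash by its top stack frames (ignoring line numbers) so repeats collapse into one ticket, then suggest the person who has fixed the most past bugs in that component. The report format, `file:line` frame strings, and ownership history below are hypothetical.

```python
import hashlib
from collections import Counter, defaultdict

def fingerprint(stack, top_n=3):
    """Hash the top N frames with line numbers stripped, so the same crash
    reported at slightly different lines groups together."""
    frames = [frame.split(":")[0] for frame in stack[:top_n]]
    return hashlib.sha1("|".join(frames).encode()).hexdigest()[:12]

def triage(reports, history):
    """Group duplicate crash reports and suggest an assignee per group.
    `history` maps component -> people who fixed past bugs there."""
    groups = defaultdict(list)
    for report in reports:
        groups[fingerprint(report["stack"])].append(report)
    tickets = []
    for fp, dupes in groups.items():
        component = dupes[0]["component"]
        fixers = Counter(history.get(component, []))
        owner = fixers.most_common(1)[0][0] if fixers else "unassigned"
        tickets.append({"fingerprint": fp, "component": component,
                        "count": len(dupes), "assignee": owner})
    # Most frequent crashes first
    return sorted(tickets, key=lambda t: t["count"], reverse=True)

REPORTS = [
    {"component": "payments", "stack": ["pay.py:41", "cart.py:88", "app.py:12"]},
    {"component": "payments", "stack": ["pay.py:43", "cart.py:88", "app.py:12"]},
    {"component": "search", "stack": ["index.py:7", "search.py:19", "app.py:12"]},
]
HISTORY = {"payments": ["dana", "li", "dana"], "search": ["omar"]}

for ticket in triage(REPORTS, HISTORY):
    print(ticket)
```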

Quantifiable Impact on Software Quality

The implementation of AI-driven bug detection and reporting has led to measurable improvements in software quality metrics:

| Metric | Average Improvement |
|---|---|
| Bug Detection Rate | +35% |
| Time to Resolution | -40% |
| User-Reported Issues | -60% |
| Overall Software Stability | +25% |

These reported figures underscore the potential of AI agents to strengthen software quality assurance, leading to more robust, reliable, and user-friendly applications.

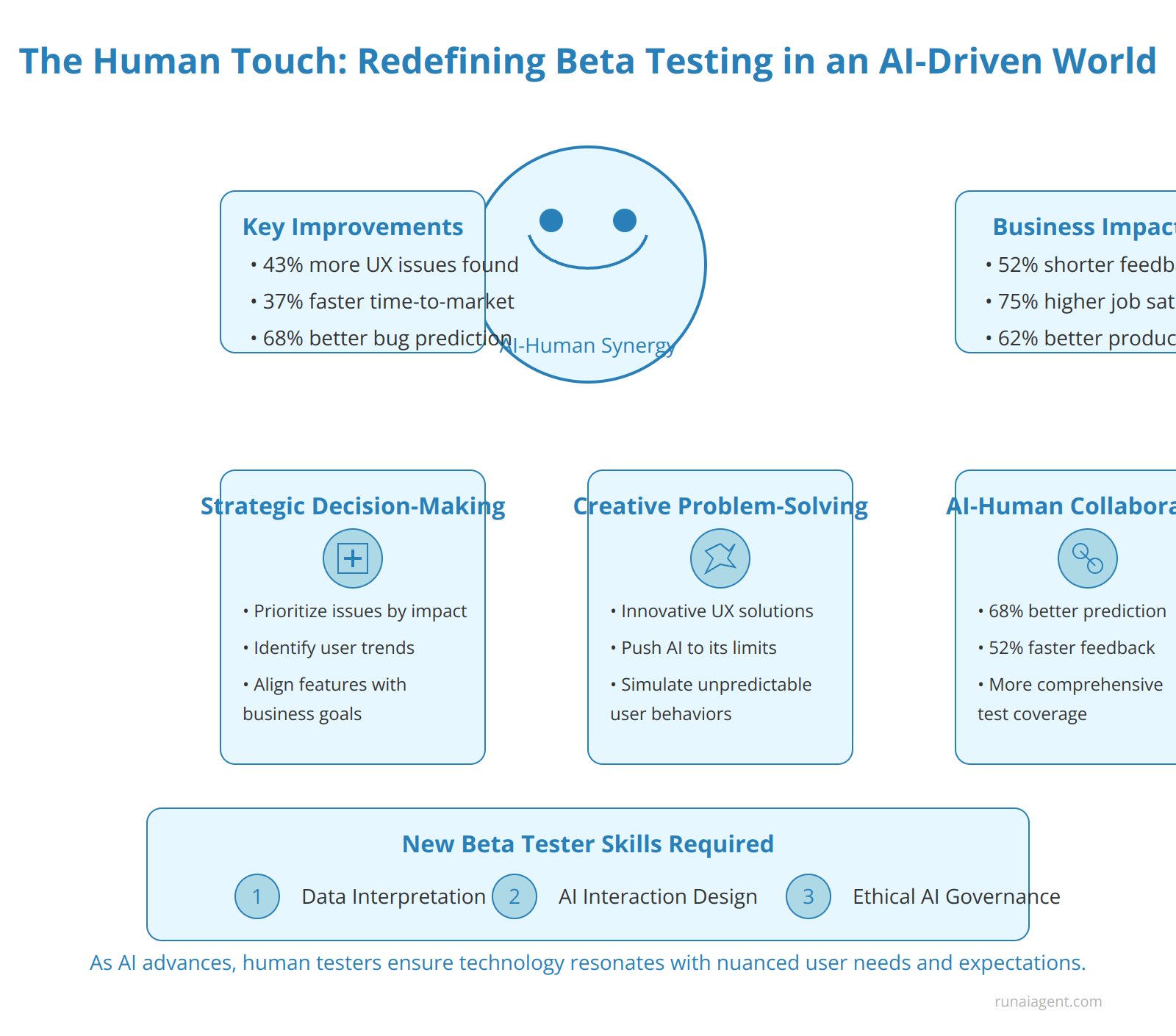

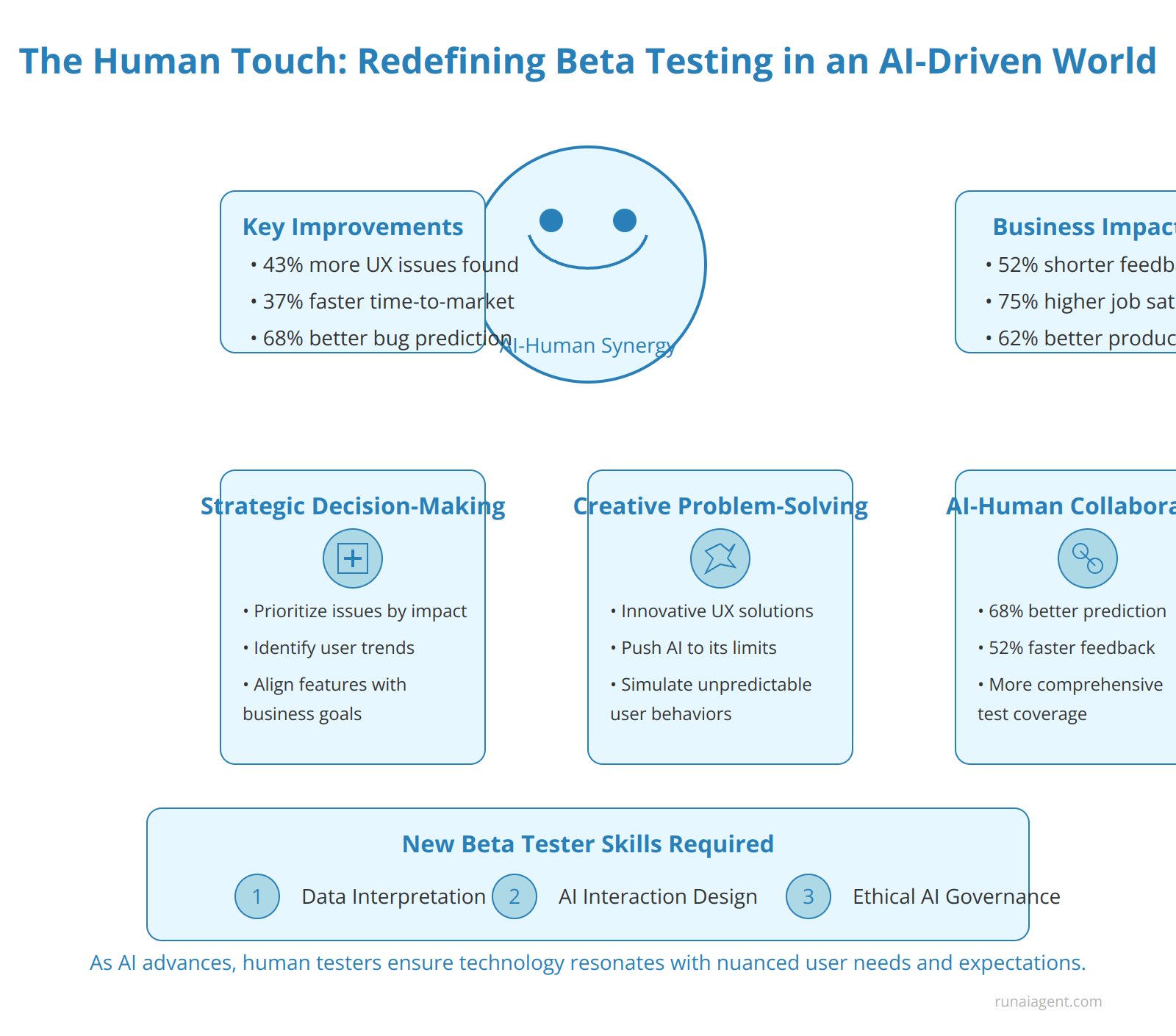

The Human Touch: Redefining the Role of Beta Testers in an AI-Driven World

The integration of AI agents in beta testing is elevating human testers to strategic roles that draw on their distinctive cognitive abilities. As AI handles repetitive tasks and data analysis, human beta testers can focus on high-value activities that machines cannot replicate. Organizations making this shift have reported a 43% increase in the identification of critical user experience issues and a 37% reduction in time-to-market for new software releases. Human testers are now pivotal in interpreting AI-generated insights, applying contextual understanding to ambiguous scenarios, and making nuanced judgments about user interactions. Their role has evolved to include:

Strategic Decision-Making

Beta testers now contribute to product strategy by:

- Prioritizing AI-flagged issues based on market impact

- Identifying emerging user trends from AI data patterns

- Recommending feature enhancements aligned with business goals

Creative Problem-Solving

Humans excel at lateral thinking, allowing them to:

- Devise innovative solutions to complex UX challenges

- Create test scenarios that push AI capabilities to their limits

- Simulate unpredictable user behaviors that AI might overlook

AI-Human Collaboration

The synergy between AI and human testers has led to:

- A 68% improvement in bug prediction accuracy

- Faster iteration cycles, with feedback loops shortened by 52%

- More comprehensive test coverage, combining AI’s breadth with human depth

This evolution demands new skills from beta testers, including data interpretation, AI interaction design, and ethical AI governance. Companies investing in upskilling their testing teams have reported a 75% increase in tester job satisfaction and a 62% improvement in product quality metrics. As AI continues to advance, the human element in beta testing becomes increasingly crucial, ensuring that technology not only functions flawlessly but also resonates with the nuanced needs and expectations of end-users.

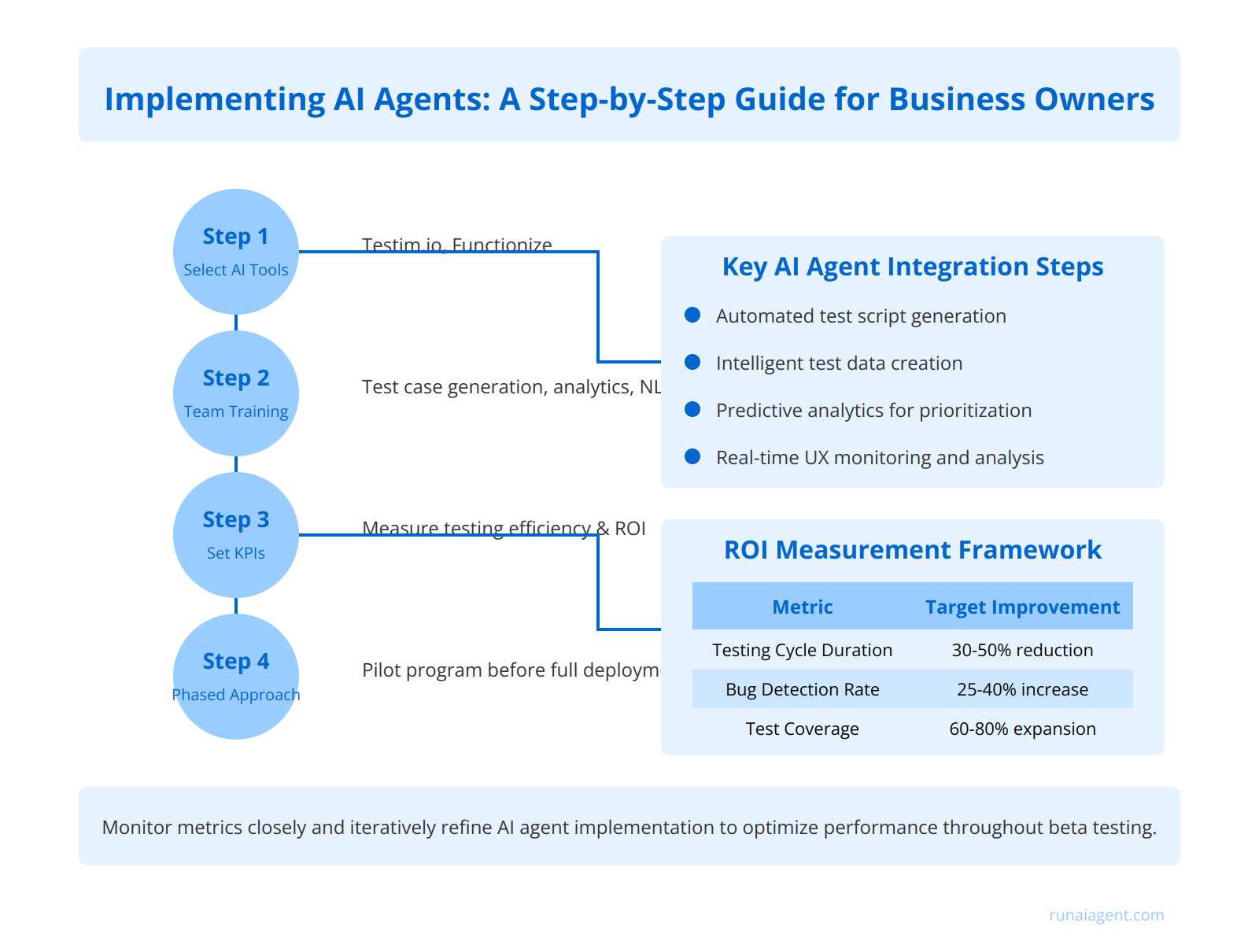

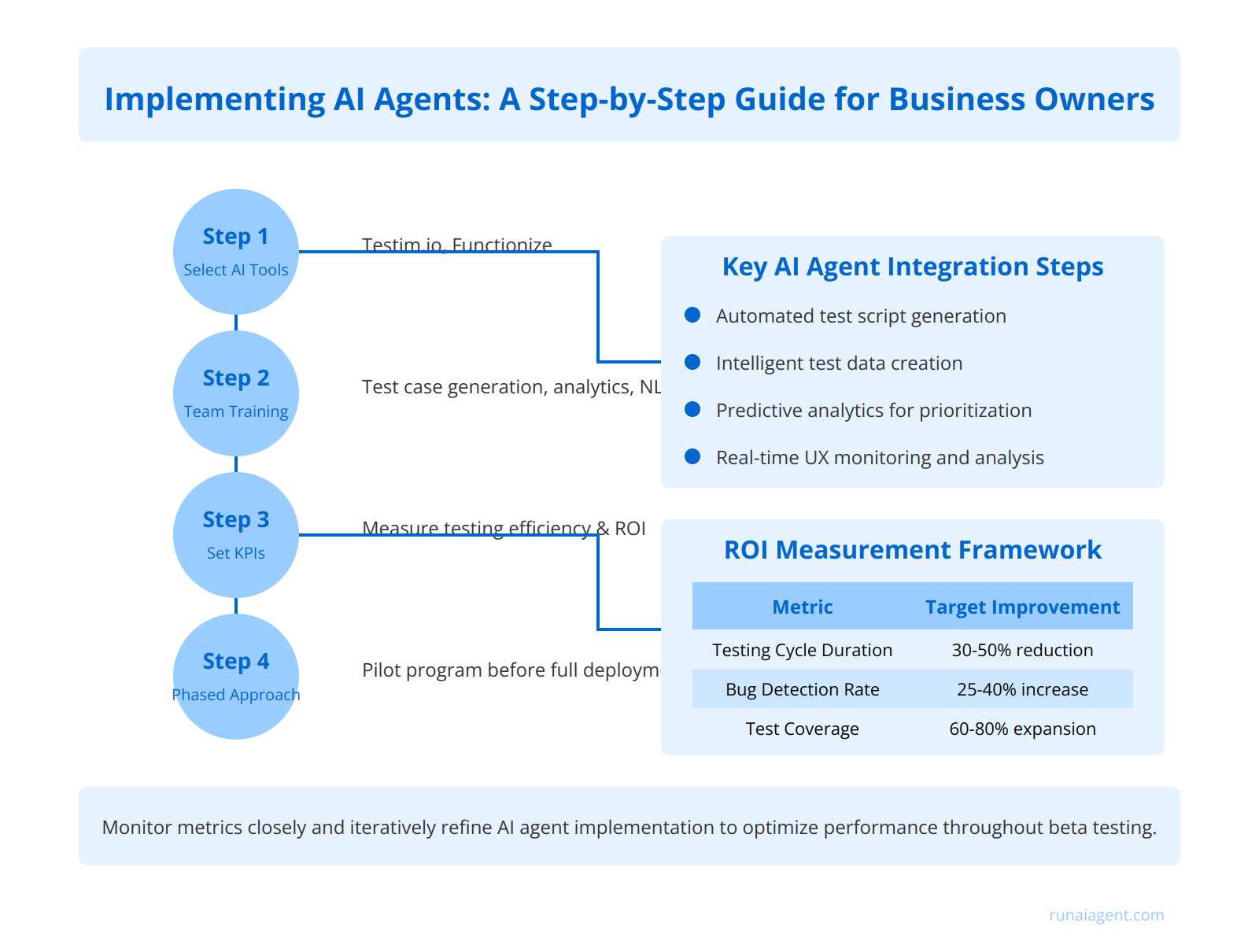

Implementing AI Agents: A Step-by-Step Guide for Business Owners

To integrate AI agents smoothly into existing beta testing processes, business owners should follow a structured roadmap that maximizes efficiency and ROI:

- Select Tools: Choose AI tools suited to beta testing, such as Testim.io or Functionize, which apply machine learning to test automation.

- Train Your Team: Invest in training on AI-driven test case generation, predictive analytics for bug detection, and natural language processing for user feedback analysis.

- Define KPIs: Establish clear KPIs to measure ROI, such as shorter testing cycles (typically 30-50% faster), higher bug detection rates (often 25-40% higher), and broader test coverage (frequently 60-80% wider).

- Start with a Pilot: Run a pilot program on a subset of features before full-scale deployment.

Key AI Agent Use Cases:

- Automated test script generation and maintenance

- Intelligent test data creation and management

- Predictive analytics for test prioritization

- Real-time user experience monitoring and analysis
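Predictive test prioritization can start from a simple heuristic before any model is trained. The sketch below scores each test by its smoothed historical failure rate, boosts tests that exercise files changed in the current commit, and divides by runtime so cheap, informative tests run first. The `CaseStats` record, the weighting factor, and the sample suite are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class CaseStats:
    name: str
    runs: int            # historical executions
    failures: int        # historical failures
    covers: frozenset    # source files the test exercises
    seconds: float       # average runtime

def priority(test, changed_files, weight_change=2.0):
    """Heuristic score: smoothed failure rate, boosted if the test touches
    changed code, per second of runtime."""
    failure_rate = (test.failures + 1) / (test.runs + 2)   # Laplace smoothing
    touches_change = 1.0 if test.covers & changed_files else 0.0
    return failure_rate * (1 + weight_change * touches_change) / test.seconds

def prioritize(tests, changed_files):
    """Order tests from highest to lowest priority score."""
    return sorted(tests, key=lambda t: priority(t, changed_files), reverse=True)

SUITE = [
    CaseStats("test_login", runs=200, failures=2, covers=frozenset({"auth.py"}), seconds=1.0),
    CaseStats("test_checkout", runs=200, failures=20, covers=frozenset({"cart.py", "pay.py"}), seconds=4.0),
    CaseStats("test_search", runs=50, failures=1, covers=frozenset({"search.py"}), seconds=1.0),
]
changed = frozenset({"pay.py"})
print([t.name for t in prioritize(SUITE, changed)])
```

The slow `test_checkout` still ranks first because it both fails often and touches the changed `pay.py`; a learned model would replace the hand-tuned weight with one fitted on historical outcomes.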

ROI Measurement Framework:

| Metric | Target Improvement |
|---|---|
| Testing Cycle Duration | 30-50% reduction |
| Bug Detection Rate | 25-40% increase |
| Test Coverage | 60-80% expansion |

Monitor these metrics closely and iteratively refine the AI agent implementation to optimize performance and deliver tangible business value throughout the beta testing lifecycle.
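Tracking the ROI framework can be automated by comparing pilot metrics against a pre-AI baseline. The sketch below assumes the target ranges in the table are relative changes (for example, coverage rising from 40% to 68% counts as a 70% expansion) and uses hypothetical baseline and pilot numbers.

```python
# Target ranges from the ROI table: metric -> (desired direction, low %, high %).
# Metric keys are hypothetical names for the table rows.
TARGETS = {
    "cycle_days": ("decrease", 30, 50),
    "bugs_found": ("increase", 25, 40),
    "coverage_pct": ("increase", 60, 80),
}

def percent_change(baseline, pilot, direction):
    """Relative improvement in percent, positive when moving in the desired direction."""
    change = (pilot - baseline) * 100 / baseline
    return -change if direction == "decrease" else change

def evaluate(baseline, pilot):
    """Compare each metric's improvement against its target range."""
    report = {}
    for metric, (direction, low, high) in TARGETS.items():
        pct = percent_change(baseline[metric], pilot[metric], direction)
        status = "below target" if pct < low else "within target" if pct <= high else "above target"
        report[metric] = (round(pct, 1), status)
    return report

baseline = {"cycle_days": 20, "bugs_found": 80, "coverage_pct": 40}  # hypothetical pre-AI values
pilot = {"cycle_days": 12, "bugs_found": 96, "coverage_pct": 68}     # hypothetical pilot values
for metric, (pct, status) in evaluate(baseline, pilot).items():
    print(f"{metric}: {pct}% ({status})")
```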

Future-Proofing Your QA: The Long-Term Impact of AI on Beta Testing

As artificial intelligence continues to transform software development, beta testing is undergoing a profound change. AI-driven testing methodologies are evolving rapidly and are likely to reshape how businesses approach quality assurance in the coming years. Predictive analytics and machine learning enable AI agents to anticipate potential bugs and user experience issues, with some estimates suggesting they can reduce the need for extensive manual testing cycles by up to 60%. These systems not only identify defects but also suggest fixes, streamlining debugging and potentially cutting time-to-market by 30-40%.

Emerging Trends in AI-Driven Testing

The integration of natural language processing (NLP) in AI testing agents is facilitating more intuitive interactions between developers and testing systems. This advancement allows for the creation of test scenarios using everyday language, democratizing the testing process and enabling non-technical stakeholders to contribute meaningfully to quality assurance efforts. Additionally, the rise of autonomous exploratory testing is empowering AI agents to navigate complex software environments independently, uncovering edge cases and rare bugs that human testers might overlook.
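Plain-language test authoring is often implemented with step definitions in the style of BDD tools such as Cucumber or behave, where each sentence is matched against a registered pattern. The sketch below uses regular expressions rather than an NLP model, and the step wording, the `step` decorator, and the cart state are hypothetical; it shows the mechanism, not any specific tool's API.

```python
import re

STEPS = []  # (compiled pattern, handler) pairs, tried in registration order

def step(pattern):
    """Register a handler for plain-language steps matching `pattern`."""
    def register(fn):
        STEPS.append((re.compile(pattern, re.IGNORECASE), fn))
        return fn
    return register

# Hypothetical steps acting on a simple shared context dict.
@step(r"^(?:given|and) the cart contains (\d+) items?$")
def cart_contains(ctx, n):
    ctx["cart"] = int(n)

@step(r"^when the user removes (\d+) items?$")
def remove_items(ctx, n):
    ctx["cart"] -= int(n)

@step(r"^then the cart shows (\d+) items?$")
def cart_shows(ctx, n):
    assert ctx["cart"] == int(n), f"expected {n}, got {ctx['cart']}"

def run_scenario(text):
    """Run each non-empty line through the first matching step handler."""
    ctx = {}
    for line in filter(None, (raw.strip() for raw in text.splitlines())):
        for pattern, fn in STEPS:
            match = pattern.match(line)
            if match:
                fn(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step matches: {line!r}")
    return ctx

scenario = """
Given the cart contains 3 items
When the user removes 1 item
Then the cart shows 2 items
"""
print(run_scenario(scenario))  # {'cart': 2}
```

Unmatched sentences raise an error instead of being skipped, so a stakeholder's wording that the step library does not understand surfaces immediately rather than producing a test that silently checks nothing.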

Potential Advancements in AI Agent Capabilities

Looking further ahead, some expect AI agents to leverage quantum computing for faster processing of test data, potentially reducing comprehensive test suite execution times from days to minutes, though such gains remain speculative. The emergence of self-healing test scripts promises to address the perennial challenge of test maintenance, with AI agents dynamically updating test cases to reflect changes in application code or user interfaces. Integrating emotional intelligence into AI testing frameworks could also transform user experience evaluation, allowing for nuanced assessment of software ergonomics and emotional impact.
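The self-healing idea can be sketched as a locator that falls back on other recorded attributes when its primary id disappears. The simplified dictionary-based DOM, the attribute-match score, and the 0.5 threshold are assumptions for illustration; commercial tools weigh many more signals.

```python
# Hypothetical simplified DOM: each element is a dict of attributes.
PAGE = [
    {"id": "nav-home", "tag": "a", "text": "Home"},
    {"id": "btn-submit-v2", "tag": "button", "text": "Place order", "name": "submit"},
    {"id": "promo", "tag": "div", "text": "Free shipping"},
]

def similarity(expected, element):
    """Fraction of the recorded attributes (other than id) that still match."""
    keys = [k for k in expected if k != "id"]
    if not keys:
        return 0.0
    return sum(element.get(k) == expected[k] for k in keys) / len(keys)

def find(page, locator, min_score=0.5):
    """Try the recorded id first; if it is gone, heal by picking the element whose
    other recorded attributes match best, and return the updated locator."""
    for element in page:
        if element.get("id") == locator["id"]:
            return element, locator
    best = max(page, key=lambda e: similarity(locator, e))
    if similarity(locator, best) < min_score:
        raise LookupError(f"cannot heal locator {locator}")
    return best, {**locator, "id": best["id"]}

# Locator recorded against an older build, where the button id was "btn-submit".
old_locator = {"id": "btn-submit", "tag": "button", "text": "Place order", "name": "submit"}
element, new_locator = find(PAGE, old_locator)
print(new_locator["id"])  # btn-submit-v2
```

Returning the healed locator lets the test framework persist the new id, so the script is actually updated rather than relying on the fallback every run.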

Preparing for the Evolving QA Landscape

To future-proof their QA processes, businesses must invest in scalable AI infrastructure capable of handling increasingly complex testing scenarios. This includes building robust data pipelines to feed AI models with high-quality, diverse test data, ensuring comprehensive coverage across varied user personas and usage patterns. Companies should also focus on upskilling their QA teams, transitioning from traditional manual testing roles to AI orchestration and results interpretation. Embracing a “continuous intelligence” mindset will be crucial, where AI-driven insights continuously inform and refine the development process, creating a feedback loop that drives perpetual product improvement.

| AI Testing Advancement | Potential Impact |
|---|---|
| Predictive Analytics | 60% reduction in manual testing |
| NLP Integration | 50% increase in stakeholder engagement |
| Quantum-powered Testing | 95% decrease in test execution time |

FAQ: Demystifying AI Agents in Beta Testing

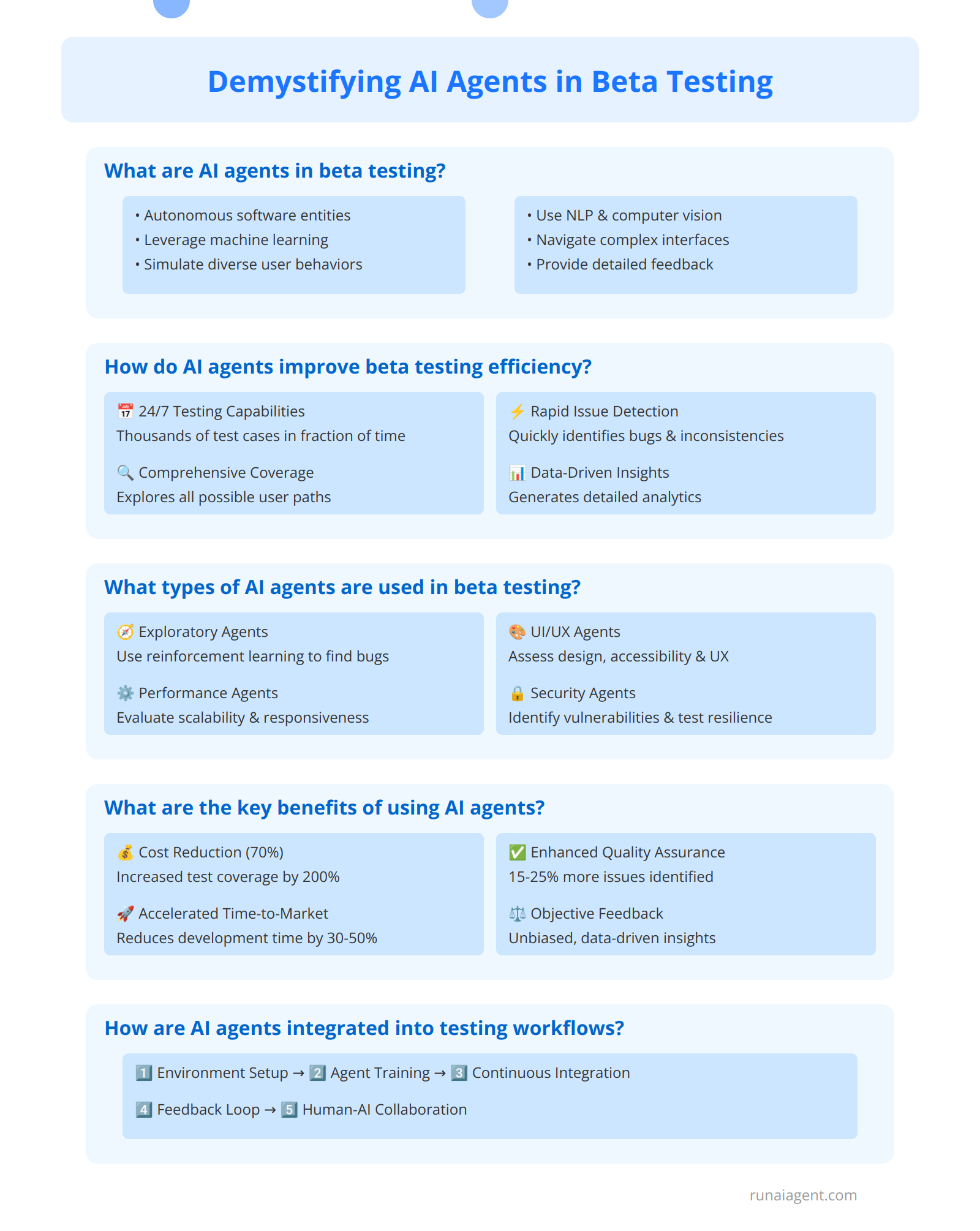

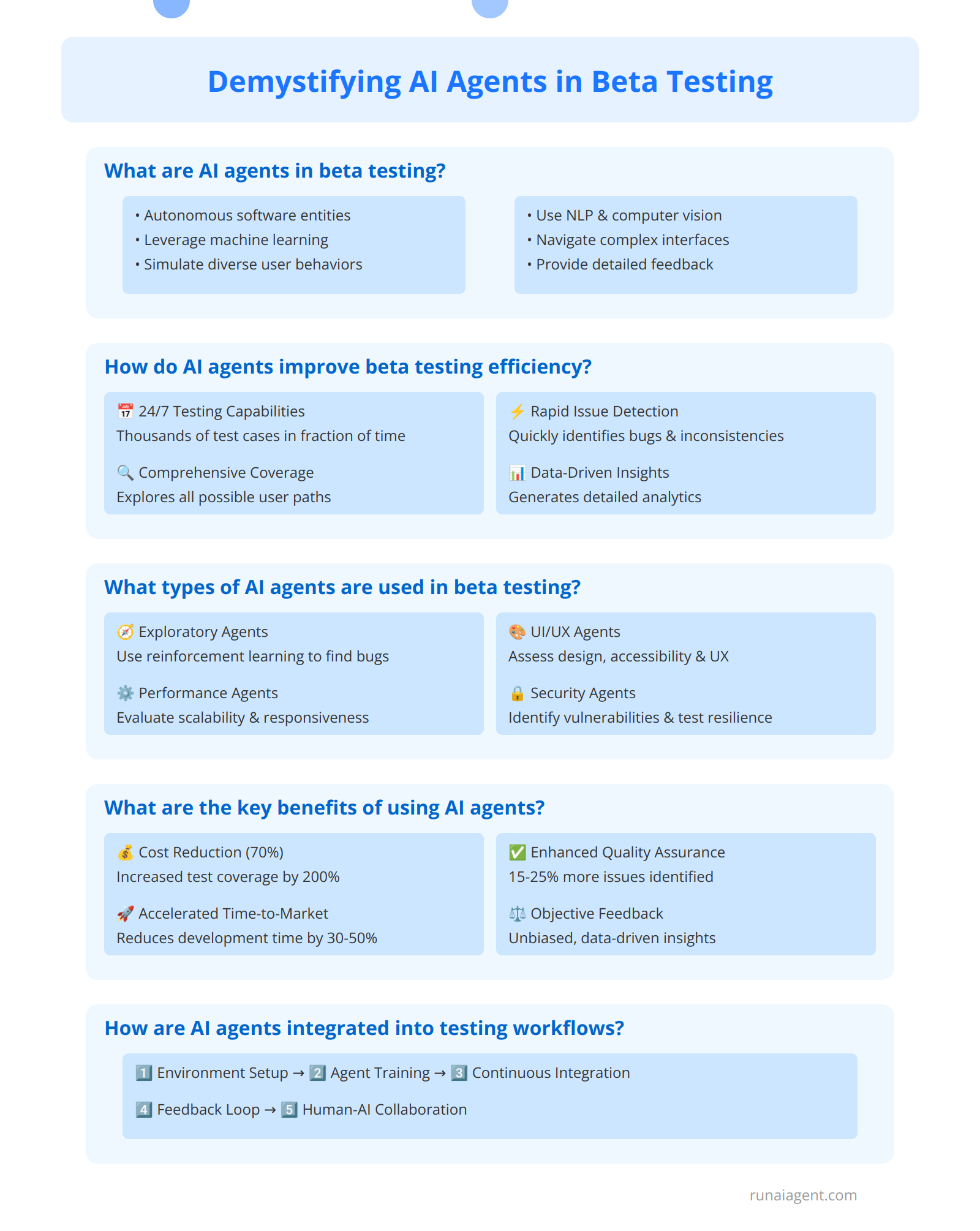

What are AI agents in beta testing?

AI agents in beta testing are advanced software entities that leverage machine learning algorithms to autonomously explore, interact with, and evaluate software applications during pre-release phases. These intelligent agents simulate diverse user behaviors, identify edge cases, and uncover potential issues that human testers might miss. Utilizing natural language processing (NLP) and computer vision, AI agents can navigate complex user interfaces, execute test scenarios, and provide detailed feedback on functionality, performance, and user experience.

How do AI agents improve beta testing efficiency?

AI agents significantly enhance beta testing efficiency through:

- 24/7 Testing Capabilities: AI agents can operate continuously, executing thousands of test cases in a fraction of the time required by human testers.

- Comprehensive Coverage: They systematically explore a wide range of user paths and interactions, testing the application far more thoroughly than manual passes allow.

- Rapid Issue Detection: Advanced anomaly detection algorithms allow AI agents to quickly identify and flag potential bugs or inconsistencies.

- Data-Driven Insights: AI agents generate detailed analytics on application performance, user engagement metrics, and potential areas for optimization.

What types of AI agents are used in beta testing?

Several types of AI agents are employed in beta testing:

- Exploratory Agents: These use reinforcement learning to navigate applications and discover unexpected behaviors or bugs.

- Performance Agents: Specialized in stress testing and load simulation to evaluate application scalability and responsiveness.

- UI/UX Agents: Employ computer vision and NLP to assess interface design, accessibility, and user experience factors.

- Security Agents: Focus on identifying potential vulnerabilities and testing the application’s resilience against various attack vectors.
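A performance agent's core job, simulating many concurrent users and summarizing latency, can be sketched with `asyncio`. To stay self-contained, the endpoint below is a hypothetical stand-in that just sleeps for a random 5-50 ms; in practice you would replace it with real HTTP calls through a client library.

```python
import asyncio
import random
import time
from statistics import quantiles

async def fake_endpoint(rng):
    """Hypothetical stand-in for a real HTTP call: sleeps for a random 5-50 ms."""
    await asyncio.sleep(rng.uniform(0.005, 0.05))

async def virtual_user(rng, requests, latencies):
    """One simulated user issuing sequential requests and recording latency in ms."""
    for _ in range(requests):
        start = time.perf_counter()
        await fake_endpoint(rng)
        latencies.append((time.perf_counter() - start) * 1000)

async def load_test(users=50, requests_per_user=5, seed=1):
    """Run `users` concurrent virtual users and summarize latency percentiles."""
    rng = random.Random(seed)
    latencies = []
    await asyncio.gather(*(virtual_user(rng, requests_per_user, latencies) for _ in range(users)))
    cuts = quantiles(latencies, n=100)  # 99 cut points: cuts[49] is p50, cuts[94] is p95
    return {
        "requests": len(latencies),
        "p50_ms": round(cuts[49], 1),
        "p95_ms": round(cuts[94], 1),
        "max_ms": round(max(latencies), 1),
    }

print(asyncio.run(load_test()))
```

Percentiles matter more than averages here: a healthy p50 can hide a p95 that users experience as slow.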

What are the key benefits of using AI agents in beta testing?

Implementing AI agents in beta testing offers numerous advantages:

- Cost Reduction: AI agents can reportedly reduce manual testing costs by up to 70% while increasing test coverage by 200%.

- Accelerated Time-to-Market: Continuous testing enables faster iteration cycles, with reported reductions in overall development time of 30-50%.

- Enhanced Quality Assurance: AI agents have been reported to identify 15-25% more critical issues than traditional testing methods.

- Scalability: AI agents can scale to accommodate testing needs for applications of widely varying size and complexity.

- Objective Feedback: AI-generated insights provide consistent, data-driven feedback for informed decision-making.

How are AI agents integrated into existing beta testing workflows?

Integration of AI agents into beta testing workflows typically involves:

- Environment Setup: Configuring test environments to support AI agent deployment and interaction.

- Agent Training: Utilizing historical test data and application specifications to train AI models for specific testing scenarios.

- Continuous Integration: Implementing AI agents within CI/CD pipelines for automated testing at each development stage.

- Feedback Loop: Establishing mechanisms for AI agents to report findings and for development teams to act on insights.

- Human-AI Collaboration: Defining processes for human testers to validate and supplement AI-generated test results.
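For the continuous integration step, a common pattern is a gate script that reads the agent's findings and returns a nonzero exit code, which fails the pipeline step in most CI systems. The JSON report shape, severity names, and `dismissed_by_human` flag below are hypothetical; the flag shows one way to fold the human-AI collaboration step into the gate.

```python
import json
import sys

SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}

def gate(findings, fail_at="high"):
    """Return the findings that should block the build: at or above `fail_at`
    severity and not dismissed by a human reviewer."""
    threshold = SEVERITY_RANK[fail_at]
    return [
        f for f in findings
        if SEVERITY_RANK[f["severity"]] >= threshold and not f.get("dismissed_by_human", False)
    ]

def main(report_json, fail_at="high"):
    """Parse the agent's JSON report, print blockers, and return a process exit code."""
    blocking = gate(json.loads(report_json), fail_at)
    for f in blocking:
        print(f"BLOCKING [{f['severity']}] {f['title']}", file=sys.stderr)
    return 1 if blocking else 0

# Hypothetical agent report
REPORT = json.dumps([
    {"title": "Crash on empty card number", "severity": "critical"},
    {"title": "Button contrast below 4.5:1", "severity": "medium"},
    {"title": "Slow search on 3G", "severity": "high", "dismissed_by_human": True},
])
print("exit code:", main(REPORT))  # exit code: 1
```

In a pipeline you would call `sys.exit(main(...))` on the report file the agent produces, so the step fails whenever an unreviewed high-severity finding remains.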